Introduction

In computer science, concurrency means making progress on multiple tasks during overlapping periods of time. iOS offers several APIs for concurrency, such as Grand Central Dispatch (GCD), NSOperationQueue, and NSThread. As you may be aware, multithreading is an execution paradigm in which numerous threads can run concurrently on a single CPU core: the operating system allocates tiny slices of computation time to each thread and switches between them. If more CPU cores are available, multiple threads can run in parallel. As a result, multithreading can greatly decrease the overall time required by many operations.
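As a minimal illustration of splitting work across threads (a sketch, not code from the original post), the example below uses GCD's DispatchQueue.concurrentPerform to sum an array in chunks. The names parallelSum and PartialSums and the default of 8 chunks are assumptions made for this example; GCD itself decides how many threads actually run the chunks.

```swift
import Foundation

// Collects one partial sum per chunk; the lock keeps concurrent writes safe.
final class PartialSums: @unchecked Sendable {
    private var sums: [Double]
    private let lock = NSLock()

    init(count: Int) {
        sums = Array(repeating: 0, count: count)
    }

    func set(_ value: Double, at index: Int) {
        lock.lock()
        defer { lock.unlock() }
        sums[index] = value
    }

    var total: Double {
        lock.lock()
        defer { lock.unlock() }
        return sums.reduce(0, +)
    }
}

// Splits `values` into chunks and lets GCD run the chunks on as many
// threads (and CPU cores) as it decides to use.
func parallelSum(of values: [Double], chunkCount: Int = 8) -> Double {
    precondition(chunkCount > 0, "chunkCount must be positive")
    let chunkSize = (values.count + chunkCount - 1) / chunkCount
    let partials = PartialSums(count: chunkCount)

    DispatchQueue.concurrentPerform(iterations: chunkCount) { chunk in
        let start = chunk * chunkSize
        let end = min(start + chunkSize, values.count)
        guard start < end else { return }  // nothing left for this chunk
        partials.set(values[start..<end].reduce(0, +), at: chunk)
    }
    return partials.total
}
```

On a single core, the chunks take turns through time slicing; with several cores, they can run in parallel. For small inputs, the threading overhead can outweigh any speedup.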

In this post, we will discuss concurrency and multithreading in iOS. We’ll start with what it was like to work with completion handlers before Swift 5.5 introduced async-await. We’ll also explore some common challenges of concurrency, such as race conditions and deadlocks, and how to solve them.

Completion Handlers

Before Swift had async-await, developers solved concurrency problems by using completion handlers. A completion handler is a callback function that passes back the return values of a long-running function, such as a network call or a heavy computation.

To demonstrate how completion handlers work, consider the following example. Assume you have a large amount of transaction data, and you want to know the average transaction amount. First, you use fetchTransactionHistory to get an array of Double values representing all transaction amounts. The calculateAverageTransaction function takes the transaction amounts returned by fetchTransactionHistory and computes a Double representing their average. Finally, uploadAverageTransaction uploads the average transaction amount to some destination and returns the String "OK" to indicate that the upload was successful. Here is some mock sample code:

```swift
import Foundation

func fetchTransactionHistory(completion: @escaping ([Double]) -> Void) {
    // Complex networking code here; let's say we send back random transactions
    DispatchQueue.global().async {
        let results = (1...100_000).map { _ in Double.random(in: 1...999) }
        completion(results)
    }
}

func calculateAverageTransaction(for records: [Double], completion: @escaping (Double) -> Void) {
    // Calculate the average of the transaction history
    DispatchQueue.global().async {
        let total = records.reduce(0, +)
        let average = total / Double(records.count)
        completion(average)
    }
}

func uploadAverageTransaction(result: Double, completion: @escaping (String) -> Void) {
    // We need to send the average transaction result to the server and show "OK"
    DispatchQueue.global().async {
        completion("OK")
    }
}
```

Figure 1: Sample code of asynchronous functions using completion handlers

Let’s say that to upload the average transaction, you first need to calculate it from the transaction history. Hence, you need to chain the functions (fetch the transaction history, calculate the average, and upload the result), nesting each call inside the previous call’s completion handler. This quickly results in “callback hell.”

Here’s what “callback hell” looks like:

```swift
// 1. This statement will be executed first
// Some function to set up the UI

// 2. Our asynchronous function is executed
fetchTransactionHistory { records in
    // 4. Records are returned; execute calculateAverageTransaction
    calculateAverageTransaction(for: records) { average in
        // 5. Average is returned; execute uploadAverageTransaction
        uploadAverageTransaction(result: average) { response in
            // 6. Lastly, print the server response
            print("Server response: \(response)")
        }
    }
}

// 3. Another statement is executed while we still wait for fetchTransactionHistory
// Some other function
```

Figure 2: Chaining function calls with completion handlers, resulting in “callback hell”

There are several problems that can occur with completion handlers:

- A function may call its completion handler more than once or, even worse, forget to call it at all.

- The @escaping (xxx) -> Void syntax does not read like natural language.

- You need to use weak references to avoid retain cycles and memory leaks.

- The more completion handlers you chain in a function, the greater the chance that you’ll end up with a “pyramid of doom” or “callback hell,” where the code indents further to accommodate each callback.

- It was difficult to pass errors back through completion handlers until Swift 5.0 introduced the Result type.
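To illustrate the last point, here is a sketch of a Result-based variant of calculateAverageTransaction. The TransactionError type and the empty-history check are assumptions added for this example; they are not part of the code in Figure 1.

```swift
import Foundation

// Hypothetical error type for this sketch
enum TransactionError: Error {
    case emptyHistory
}

// Result-based variant of calculateAverageTransaction (an assumption, not the
// code from Figure 1): this implementation calls the handler once, with either
// a .success or a .failure value.
func calculateAverageTransaction(
    for records: [Double],
    completion: @escaping (Result<Double, TransactionError>) -> Void
) {
    DispatchQueue.global().async {
        guard !records.isEmpty else {
            completion(.failure(.emptyHistory))
            return
        }
        let average = records.reduce(0, +) / Double(records.count)
        completion(.success(average))
    }
}
```

A caller can switch over the result, handling .success(let average) and .failure(let error) in separate cases, so the error path is explicit instead of hidden behind an optional or a sentinel value.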

Async-Await

At WWDC 2021, Apple introduced async-await as part of Swift 5.5’s new structured concurrency features. With async functions and await expressions, you can write asynchronous calls clearly and in order. Before we look at how async-await solves the problems of completion handlers, let's discuss what async and await actually are.

Async

async is a keyword in a function declaration indicating that the function performs asynchronous work and may suspend while it waits.

Here is an example of fetchTransactionHistory written using async:

```swift
import Foundation

func fetchTransactionHistory() async throws -> [Double] {
    // Complex networking code here; let's say we send back random transactions.
    // An async function returns its result directly, so no DispatchQueue
    // or completion handler is needed.
    let results = (1...100_000).map { _ in Double.random(in: 1...999) }
    return results
}
```

Figure 3: Sample code of an asynchronous function using the new async keyword

The fetchTransactionHistory function is more natural to read than the version written with completion handlers. It is now an async throwing function, which means it performs asynchronous work that can fail. If something goes wrong while it executes, it throws an error; otherwise, it returns a list of transaction amounts (as Double values).

Await

To invoke an async function, use the await keyword. Put simply, await marks a point where the caller suspends until the async function returns its result (or throws an error).

If you only want to call fetchTransactionHistory, the code will look like this:

```swift
// Must run in an async context, for example inside a Task or another async function
do {
    let transactionList = try await fetchTransactionHistory()
    print("Fetched \(transactionList.count) transactions.")
} catch {
    print("Fetching transactions failed with error \(error)")
}
```

Figure 4: Implementation of await statement to call async function

The above code calls the asynchronous function fetchTransactionHistory. The await keyword tells Swift to wait for the result of the lengthy work performed by fetchTransactionHistory and to continue to the next step only when the result is available. While it waits, the calling function is suspended rather than blocking its thread. The result is either a list of transactions or, if something went wrong, an error.
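Code that still relies on completion handlers can be awaited too. A minimal sketch, assuming the completion-handler fetchTransactionHistory from Figure 1, wraps it with the standard library's withCheckedContinuation. The async overload below is our own illustration, not part of the original code, and unlike Figure 3 it does not throw.

```swift
import Foundation

// Completion-handler version, as in Figure 1
func fetchTransactionHistory(completion: @escaping ([Double]) -> Void) {
    DispatchQueue.global().async {
        let results = (1...100_000).map { _ in Double.random(in: 1...999) }
        completion(results)
    }
}

// Hypothetical async overload: the caller suspends at `await` until the
// completion handler resumes the continuation (which must happen exactly once)
func fetchTransactionHistory() async -> [Double] {
    await withCheckedContinuation { continuation in
        fetchTransactionHistory { records in
            continuation.resume(returning: records)
        }
    }
}
```

A checked continuation traps if it is resumed twice and logs a warning if it is never resumed, which helps catch the "called more than once or never" problem listed earlier.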

Structured Concurrency

Look back at Figure 2, which shows the chain of calls needed to run asynchronous functions with completion handlers. Code written this way is what we refer to here as unstructured concurrency. In that example, we called fetchTransactionHistory in between other code that kept running on the same thread (in this case, the main thread). You never know exactly when the asynchronous function will return its value, so you handle it inside the callback; and if the function never calls its completion handler, you never get a result at all. This makes the order of execution hard to follow.

async-await allows you to use structured concurrency to control the order of execution. With structured concurrency, asynchronous calls execute linearly (step by step), without jumping back and forth between callbacks.

This is how you rewrite the chained function calls using structured concurrency:

```swift
do {
    // 1. Call the fetchTransactionHistory function first
    let transactionList = try await fetchTransactionHistory()
    // 2. fetchTransactionHistory function returns

    // 3. Call the calculateAverageTransaction function
    let avgTrx = try await calculateAverageTransaction(transactionList)
    // 4. calculateAverageTransaction function returns

    // 5. Call the uploadAverageTransaction function
    let serverResponse = try await uploadAverageTransaction(avgTrx)
    // 6. uploadAverageTransaction function returns

    print("Server response: \(serverResponse)")
} catch {
    print("Fetching transactions failed with error \(error)")
}

// 7. Resume execution of another statement here
```

Figure 5: Sample implementation of chaining asynchronous functions using structured concurrency

Now the order of execution is linear, and the flow of the code is easier to understand. Rewriting your asynchronous code with async-await and structured concurrency makes it easier to read and to debug complex business logic.
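Figure 5 assumes async versions of calculateAverageTransaction and uploadAverageTransaction, which are not shown in the post. The sketch below is one way they might look, with unlabeled parameters so the calls in Figure 5 compile as written. The TransactionError type, the empty-history check, the 100 ms sleep standing in for a network request, and the processTransactions wrapper are all assumptions for illustration.

```swift
import Foundation

enum TransactionError: Error {
    case emptyHistory
}

func fetchTransactionHistory() async throws -> [Double] {
    (1...100_000).map { _ in Double.random(in: 1...999) }
}

func calculateAverageTransaction(_ records: [Double]) async throws -> Double {
    guard !records.isEmpty else { throw TransactionError.emptyHistory }
    return records.reduce(0, +) / Double(records.count)
}

func uploadAverageTransaction(_ average: Double) async throws -> String {
    // Stand-in for a network request: pretend the server answers after 100 ms
    try await Task.sleep(nanoseconds: 100_000_000)
    return "OK"
}

// Figure 5's chain wrapped in a function; each await finishes before the next line runs
func processTransactions() async -> String {
    do {
        let transactionList = try await fetchTransactionHistory()
        let avgTrx = try await calculateAverageTransaction(transactionList)
        return try await uploadAverageTransaction(avgTrx)
    } catch {
        return "Failed with error \(error)"
    }
}
```

Any error thrown at any step lands in the single catch block, instead of having to be passed through three separate callbacks.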

Async Let

While structured concurrency lets you execute asynchronous functions linearly with async-await, sometimes you want to run them in parallel. Let's say that, to save time by using all available resources, you want to call uploadAverageTransaction several times in parallel.

Here’s how you would call it several times with plain async-await:

```swift
do {
    // Each call waits for the previous upload to finish before it starts
    let serverResponse1 = try await uploadAverageTransaction(avgTrx1)
    let serverResponse2 = try await uploadAverageTransaction(avgTrx2)
    let serverResponse3 = try await uploadAverageTransaction(avgTrx3)
} catch {
    print("Uploading transactions failed with error \(error)")
}
```

Figure 6: Linear execution of async-await functions

Assuming uploadAverageTransaction prints a line each time an upload finishes, this code produces:

Finished upload average transaction 1 with response OK

Finished upload average transaction 2 with response OK

Finished upload average transaction 3 with response OK

Figure 7: Execution result of linear async-await functions

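Because the order in which the uploads finish doesn't matter, you can take advantage of async let to run these calls in parallel:

```swift
do {
    // Each async let starts a child task immediately; the uploads run concurrently
    async let serverResponse1 = uploadAverageTransaction(avgTrx1)
    async let serverResponse2 = uploadAverageTransaction(avgTrx2)
    async let serverResponse3 = uploadAverageTransaction(avgTrx3)

    // Wait for all three results; errors are thrown here, not on the lines above
    let responses = try await [serverResponse1, serverResponse2, serverResponse3]
    print("Server responses: \(responses)")
} catch {
    print("Uploading transactions failed with error \(error)")
}
```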

Figure 8: Parallel execution of functions using async-let

Now the uploads finish in whatever order resource availability and execution time allow. One possible output is:

Finished upload average transaction 3 with response OK

Finished upload average transaction 1 with response OK

Finished upload average transaction 2 with response OK

Figure 9: Execution result of parallel functions call using async-let
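To see the difference in a runnable form, the sketch below simulates three uploads with Task.sleep. The extra index and delay parameters on this hypothetical uploadAverageTransaction exist only so the example can print lines similar to Figures 7 and 9 and finish at different times.

```swift
import Foundation

// Hypothetical upload that takes `delay` seconds and prints when it finishes
func uploadAverageTransaction(_ average: Double, index: Int, delay: Double) async throws -> String {
    try await Task.sleep(nanoseconds: UInt64(delay * 1_000_000_000))
    print("Finished upload average transaction \(index) with response OK")
    return "OK"
}

// Starts three uploads as child tasks; they run concurrently, and the
// `try await` on the tuple waits for all of them
func uploadAllInParallel() async throws -> [String] {
    async let response1 = uploadAverageTransaction(120.5, index: 1, delay: 0.5)
    async let response2 = uploadAverageTransaction(98.0, index: 2, delay: 0.4)
    async let response3 = uploadAverageTransaction(143.2, index: 3, delay: 0.3)
    let (r1, r2, r3) = try await (response1, response2, response3)
    return [r1, r2, r3]
}
```

With these delays, upload 3 will most likely finish first, and the whole function should take roughly as long as the slowest upload (about 0.5 seconds) rather than the sum of all three. If an async let is never awaited, Swift implicitly cancels and awaits it when the scope ends, which is why the results are awaited explicitly.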

Challenges with Concurrency

Concurrency and multithreading are powerful techniques, but they come with many challenges, including race conditions and deadlocks. Both issues are related to accessing the same shared resources.

Race Condition

A race condition happens when the outcome of a program depends on the timing of two or more operations that access the same resource at the same time. Critical race conditions frequently occur when tasks or threads rely on the same shared state.

To illustrate, imagine you want to create a digital banking app that users can use to check their account balances and transfer money to other users. One of the app's main features is that users can install it on more than one device. The app must ensure that the user has sufficient funds before transferring money to another user; otherwise, the app’s provider must bear the loss. Now take a look at the TransactionManager class. We use this class to manage all transaction-related functions, such as updating a transaction’s status and fetching a transaction. TransactionManager works in a multithreaded environment, so its methods dispatch their work asynchronously.

This is how the TransactionManager code looks:

```swift
import Foundation

struct Transaction {
    var id = 0
    var status = "PENDING"
    var amount = 0
}

class TransactionManager {
    private var transactionList = [Int: Transaction]()
    // A concurrent queue: blocks submitted to it may run at the same time,
    // and nothing coordinates their reads and writes of transactionList
    private let queue = DispatchQueue(label: "transaction.queue", attributes: .concurrent)

    func updateTransaction(_ transaction: Transaction) {
        queue.async {
            self.transactionList[transaction.id] = transaction
        }
    }

    func fetchTransaction(withID id: Int,
                          handler: @escaping (Transaction?) -> Void) {
        queue.async {
            handler(self.transactionList[id])
        }
    }
}
```

Figure 10: Sample of race condition code

This implementation looks correct at first glance, but because the queue is concurrent, a race condition may occur when you update a transaction and fetch it at the same time. (With a serial queue, which is what DispatchQueue(label:) creates by default, the blocks would run one at a time in the order they were submitted.) Take a look at the following example:

```swift
let manager = TransactionManager()
let transaction = Transaction(id: 8, status: "PENDING", amount: 100)
// Store the initial PENDING transaction
manager.updateTransaction(transaction)

func tryRaceCondition() {
    let updatedTransaction = Transaction(id: 8, status: "FAILED", amount: 100)
    manager.updateTransaction(updatedTransaction)

    manager.fetchTransaction(withID: 8) { transaction in
        print(transaction as Any)
        // This might print the transaction with status "PENDING",
        // even though we already updated the status
    }
}
```

The above example may print the transaction with status “PENDING”, even though we updated it on the previous line. The asynchronous methods do not guarantee that the block enqueued by updateTransaction has finished when fetchTransaction reads the data; if the update takes longer, fetchTransaction returns the data from before the update. Worse, the two blocks may read and write transactionList at the same moment, which is a data race whose behavior is undefined.

To solve race conditions, you can use an actor. An actor prevents more than one task from accessing its data at the same time by synchronizing access: its properties and methods are accessed serially. You can use locks to achieve the same behavior, but with an actor, the Swift compiler and runtime handle all of the synchronization for you as an implementation detail.

Here’s how to rewrite the code using an actor:
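For comparison, here is a lock-based sketch of the same idea. LockedTransactionManager is a hypothetical name; every access to transactionList happens while holding a single NSLock, which is the manual work that an actor hides from you.

```swift
import Foundation

struct Transaction {
    var id = 0
    var status = "PENDING"
    var amount = 0
}

// Lock-based alternative (a sketch). Every read and write of transactionList
// happens while holding the same NSLock, so no two threads touch it at once.
final class LockedTransactionManager: @unchecked Sendable {
    private var transactionList = [Int: Transaction]()
    private let lock = NSLock()

    func updateTransaction(_ transaction: Transaction) {
        lock.lock()
        defer { lock.unlock() }
        transactionList[transaction.id] = transaction
    }

    func fetchTransaction(withID id: Int) -> Transaction? {
        lock.lock()
        defer { lock.unlock() }
        return transactionList[id]
    }
}
```

Because both methods are synchronous, a fetch that starts after an update has returned always sees the new value. The trade-off is that you must remember to take the lock on every access path yourself.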

```swift
actor TransactionManager {
    private var transactionList = [Int: Transaction]()

    func updateTransaction(_ transaction: Transaction) {
        transactionList[transaction.id] = transaction
    }

    func fetchTransaction(withID id: Int) -> Transaction? {
        transactionList[id]
    }
}
```

Figure 11: Using an actor to solve the race condition

As you can see, we no longer need to manage threads or dispatch queues, because an actor requires callers from outside the actor to use await when calling its methods. The Swift compiler and runtime take care of synchronization: the actor executes only one piece of code at a time, so you don’t need to do anything else to protect the shared data. Because tryRaceCondition() now awaits updateTransaction before it calls fetchTransaction, calling it prints the updated transaction with “FAILED” status.

```swift
let manager = TransactionManager()
let transaction = Transaction(id: 8, status: "PENDING", amount: 100)

func tryRaceCondition() async {
    let updatedTransaction = Transaction(id: 8, status: "FAILED", amount: 100)
    // The update finishes before the next line runs
    await manager.updateTransaction(updatedTransaction)
    let transaction = await manager.fetchTransaction(withID: 8)
    print(transaction as Any)
    // Prints the transaction with status "FAILED"
}
```
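To connect this back to the banking scenario, here is a sketch of an actor that checks for sufficient funds and deducts the amount in one method. Account, withdraw(_:), and simulateConcurrentTransfers are hypothetical names; the task group stands in for transfers arriving from several devices at once.

```swift
// Hypothetical actor: the balance check and the deduction happen inside one
// actor method, so no other call can run in between them
actor Account {
    private(set) var balance: Int

    init(balance: Int) {
        self.balance = balance
    }

    // Returns true if the withdrawal was allowed
    func withdraw(_ amount: Int) -> Bool {
        guard amount <= balance else { return false }
        balance -= amount
        return true
    }
}

// Fires many withdrawals concurrently and counts how many succeeded
func simulateConcurrentTransfers(account: Account, attempts: Int, amount: Int) async -> Int {
    return await withTaskGroup(of: Bool.self, returning: Int.self) { group in
        for _ in 0..<attempts {
            group.addTask { await account.withdraw(amount) }
        }
        var successes = 0
        for await succeeded in group {
            if succeeded { successes += 1 }
        }
        return successes
    }
}
```

Because the check and the deduction happen inside a single actor method with no await in between, no other withdrawal can run between them, so the balance never goes negative. If the method awaited something between the check and the deduction, other calls could interleave at that suspension point.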

Deadlock

A deadlock occurs when each member of a group of tasks is waiting for another member, possibly itself, to take an action (such as sending a message or, more typically, releasing a lock). In simple terms, a deadlock occurs when a task waits for a resource to become available while it is logically impossible for that resource ever to become available.

A common way to produce a deadlock is to synchronously dispatch a task onto the serial queue that is already running the current task: the outer task waits for the inner one, which cannot start until the outer one finishes. Deadlocks can also happen when many tasks on a concurrent queue block on synchronous calls or wait for resources that never become available.

This is sample code that will produce a deadlock:

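```swift
import Foundation

// DispatchQueue(label:) creates a serial queue
let queue = DispatchQueue(label: "my-queue")

queue.sync {
    print("print this")
    // The outer block is still running on the serial queue, so this inner
    // sync call waits for a block that can never start: deadlock
    queue.sync {
        print("deadlocked")
    }
}
```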

Figure 12: Example of code producing deadlock

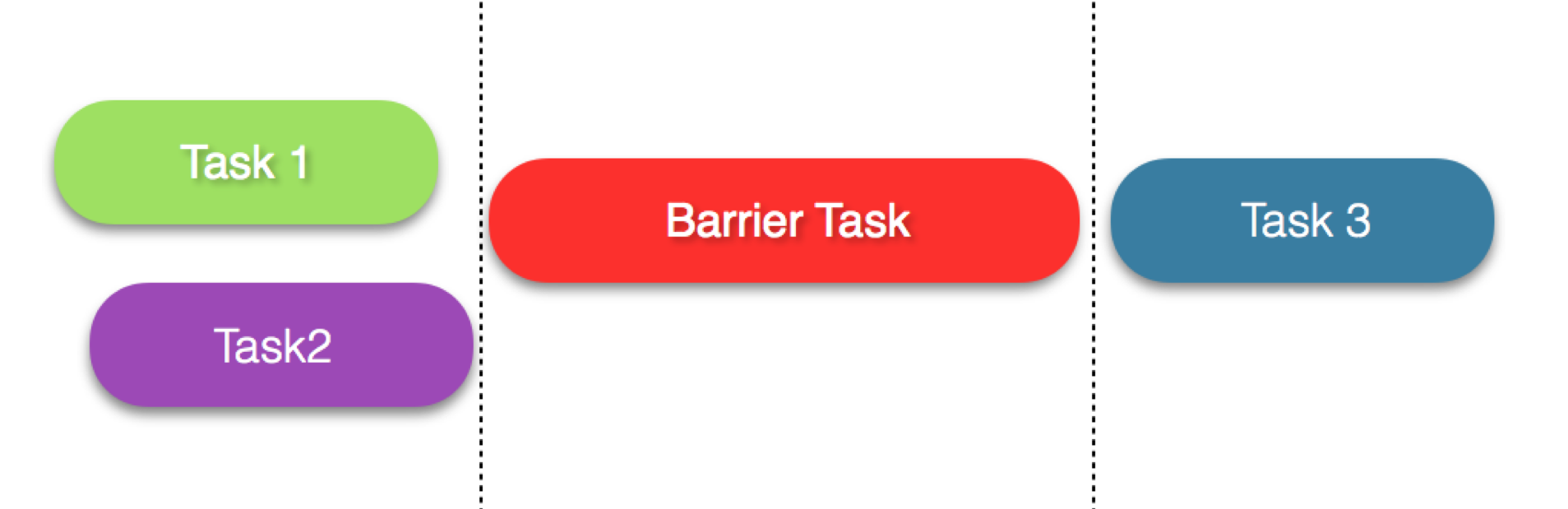

There are several thread-safety mechanisms that can help avoid these problems, including Dispatch Barrier. A barrier task submitted to a custom concurrent queue waits until all tasks submitted before it have finished, then runs alone, creating a deliberate bottleneck so that no other task on that queue executes at the same time. Once the barrier task finishes, the queue resumes running its other tasks concurrently. (On a serial queue, tasks already run one at a time, so a barrier has no additional effect.) This may seem to slow things down. However, in some cases you need to limit large numbers of asynchronous tasks to make sure resources remain available and to avoid deadlock.

This is sample code for implementing Dispatch Barrier:

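```swift
import Foundation

// Barriers only take effect on a custom concurrent queue
let queue = DispatchQueue(label: "my-queue", attributes: .concurrent)

queue.async(flags: .barrier) {
    print("print this")
    // async returns immediately instead of waiting, so the outer block never
    // blocks on the queue it is running on; that is what avoids the deadlock
    queue.async(flags: .barrier) {
        print("no more deadlock")
    }
}
```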

Figure 13: Solving deadlock using Dispatch Barrier
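A barrier is most useful on a custom concurrent queue, where it gives you a reader-writer pattern: many reads can run at once, while each write runs alone. The sketch below applies that pattern to a TransactionManager-style class; BarrierTransactionManager is a hypothetical name, and this is one common way to use barriers, not the only one.

```swift
import Foundation

struct Transaction {
    var id = 0
    var status = "PENDING"
    var amount = 0
}

// Reader-writer sketch: reads use `sync` and may overlap each other,
// while writes use a barrier so they run alone on the concurrent queue
final class BarrierTransactionManager: @unchecked Sendable {
    private var transactionList = [Int: Transaction]()
    private let queue = DispatchQueue(label: "transaction.queue", attributes: .concurrent)

    func updateTransaction(_ transaction: Transaction) {
        // The barrier waits for earlier blocks to finish, runs by itself,
        // and only then lets later blocks start
        queue.async(flags: .barrier) {
            self.transactionList[transaction.id] = transaction
        }
    }

    func fetchTransaction(withID id: Int) -> Transaction? {
        // Reads may overlap with each other but never with a barrier write
        queue.sync {
            transactionList[id]
        }
    }
}
```

Because a fetch is submitted after any earlier barrier write from the same thread, it always sees that write, which avoids the stale read described in the race condition section.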

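Other synchronization tools, such as DispatchSemaphore and NSLock, can also protect shared resources or limit how many tasks use them at once. Used carelessly, however, they can cause deadlocks of their own.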
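One way a DispatchSemaphore helps keep resources available is by capping how many tasks run at the same time. In the sketch below, runLimited and ConcurrencyTracker are hypothetical names, and the limit of two concurrent tasks is arbitrary.

```swift
import Foundation

// Records how many tasks are inside the limited section at once (demo only)
final class ConcurrencyTracker: @unchecked Sendable {
    private let lock = NSLock()
    private var current = 0
    private var peak = 0

    func enter() {
        lock.lock()
        defer { lock.unlock() }
        current += 1
        peak = max(peak, current)
    }

    func leave() {
        lock.lock()
        defer { lock.unlock() }
        current -= 1
    }

    var maximumObserved: Int {
        lock.lock()
        defer { lock.unlock() }
        return peak
    }
}

// Runs `taskCount` blocks on a concurrent queue, but a semaphore with
// `maxConcurrent` slots lets only that many run at the same time
func runLimited(taskCount: Int, maxConcurrent: Int, tracker: ConcurrencyTracker) {
    let semaphore = DispatchSemaphore(value: maxConcurrent)
    let group = DispatchGroup()
    let queue = DispatchQueue(label: "limited.work", attributes: .concurrent)

    for _ in 0..<taskCount {
        semaphore.wait()  // block the submitting thread until a slot is free
        queue.async(group: group) {
            tracker.enter()
            Thread.sleep(forTimeInterval: 0.01)  // simulated work
            tracker.leave()
            semaphore.signal()  // free the slot for the next task
        }
    }
    group.wait()  // wait for the remaining tasks to finish
}
```

Waiting on the semaphore before dispatching, rather than inside each block, avoids parking many blocked GCD worker threads while they wait for a slot.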

Figure 14: Visualization of barrier task in Dispatch Barrier. (Source: https://basememara.com)

Conclusion

Because of its complexity, concurrent code is difficult to debug. Modern IDEs already provide debuggers. However, for concurrency or multithreading issues, they only give us a collection of threads and some information about each thread’s memory state. This can be useful if we understand the low-level machine code, but most of the time we only care about tracing back the steps our code took on different threads.

To help debug concurrent code, you need a good logging mechanism. It can be hard to find which code is causing a concurrency problem, especially while the application is running. You can deduce what is wrong by logging status information at key points in your program and observing it. Shipbook helps with this by giving you the power to remotely gather, search, and analyze your users’ mobile-app logs, and even crashes, in the cloud on a per-user and per-session basis. This lets you easily analyze data related to the concurrent functions in your app and catch bugs that no one found in the testing phase.