Software developers have always relied on logs as a fundamental tool for understanding what happens inside running systems. Logs capture reality: the sequence of events, the state of the system at a given moment, errors that occurred, and the context in which everything happened.

As AI agents increasingly participate in writing, modifying, and maintaining code, it may be tempting to think that logs will become less important — or even obsolete. In practice, the opposite is true. Logs are becoming more critical than ever. The difference is who will primarily consume them, and how they need to be structured.

This post explores why logs remain essential in the age of AI agents, how the nature of logging is likely to change, and what this means for modern development platforms.

AI Makes Mistakes — Just Like Humans

To understand the future role of logs, we need to start with a realistic understanding of how large language models (LLMs) work.

LLMs do not reason about code in the same way compilers, interpreters, or formal verification systems do. They generate output by predicting the most likely next token based on vast amounts of training data. This makes them extremely powerful pattern generators — but not infallible problem solvers.

As a result:

- LLMs make mistakes, sometimes obvious and sometimes subtle.

- They can produce code that looks correct but fails under real-world conditions.

- They are prone to hallucinations — confidently generating incorrect logic, APIs that don’t exist, or assumptions that are not grounded in reality.

- They often lack awareness of runtime behavior, concurrency issues, environmental differences, or system-specific edge cases.

Let’s look at a few concrete examples.

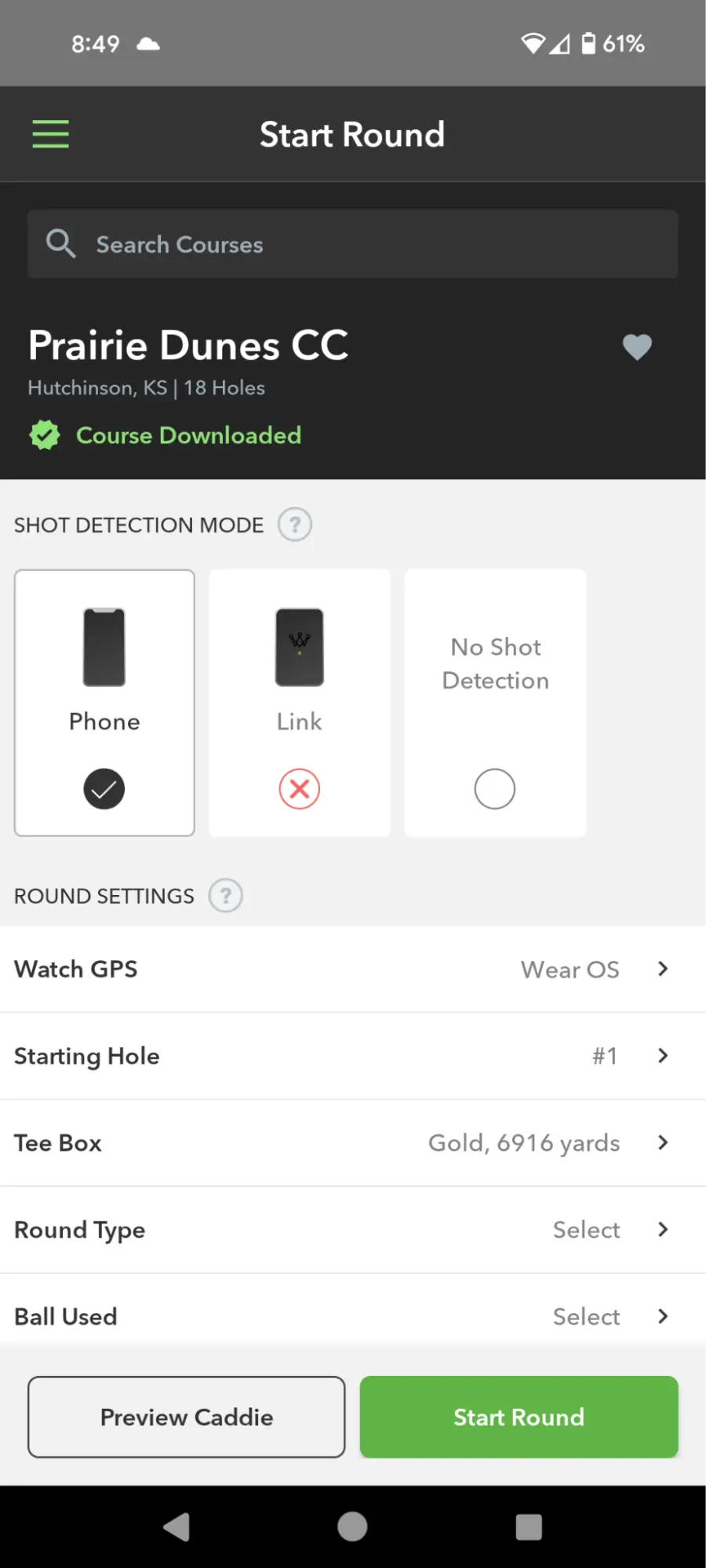

Example 1: Android Logging Gone Wrong

Imagine an AI agent generating Android code to log network responses:

```kotlin
Log.d("Network", "Response: " + response.body().string())
```

At first glance, this looks fine. But calling response.body().string() consumes the response stream, which can be read only once. If the same response is later needed for parsing JSON, that second read fails and the app will crash or behave unpredictably. Both human developers and AI models can overlook this subtle side effect during implementation or testing.

Proper logging would look like this:

```kotlin
val bodyString = response.peekBody(Long.MAX_VALUE).string()
Log.d("Network", "Response: $bodyString")
```

However, even this fixed version has a cost: peekBody(Long.MAX_VALUE) buffers the entire body in memory, so an extremely large response can exhaust the available heap. Passing a modest byte limit instead bounds the memory used, at the price of a truncated log line.

This shows how subtle such code can be. Without logs showing the crash or the missing data, the AI agent would have no feedback that its generated code caused a runtime issue.

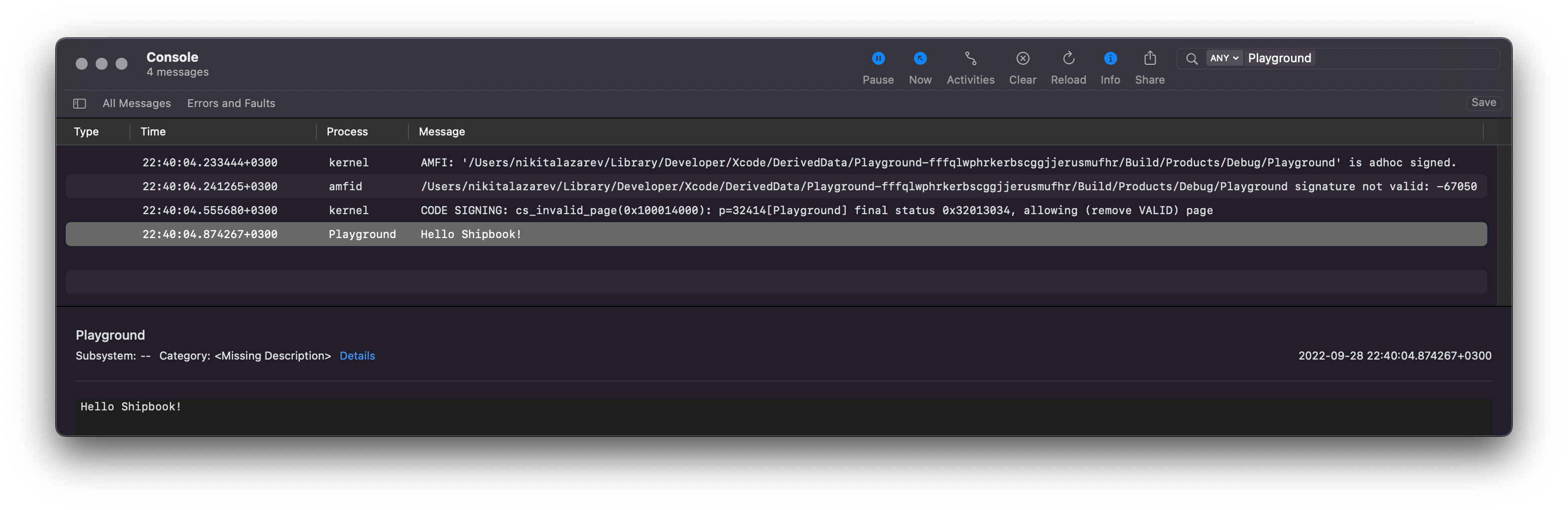

Example 2: iOS Threading Issues

Consider an AI model generating Swift code for updating the UI after a background network call:

```swift
URLSession.shared.dataTask(with: url) { data, response, error in
    if let data = data {
        self.statusLabel.text = "Loaded \(data.count) bytes"
    }
}.resume()
```

This code compiles and may even work sometimes, but it violates UIKit’s rule that UI updates must occur on the main thread. The result could be random crashes or UI glitches.

A correct version would wrap the UI update in a DispatchQueue.main.async block:

```swift
DispatchQueue.main.async {
    self.statusLabel.text = "Loaded \(data.count) bytes"
}
```

Logs capturing the crash or warning from the runtime would be the only reliable signal for the AI agent to detect and correct this mistake.

Example 3: Hallucinated APIs

LLMs sometimes invent REST APIs that don’t exist — something that might only become apparent once the product is running in production. For example, an AI might generate code that calls a fictional endpoint:

```kotlin
val response = Http.post("https://api.myapp.com/v2/user/trackEvent", event.toJson())
if (response.isSuccessful) {
    Log.i("Analytics", "Event sent successfully")
}
```

If that /v2/user/trackEvent endpoint was never implemented, the code will compile and even deploy, but the system will start logging 404 errors or timeouts once it’s live. Those logs are the only signal — for both humans and AI agents — that the generated API was imaginary and needs correction.
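One way an agent (or a human) might act on those logs is to look for endpoints that have only ever answered with 404. The sketch below is illustrative only: the `RequestLog` shape, the `suspectedMissingEndpoints` name, and the `minHits` threshold are assumptions made for this example, not part of any Android or Shipbook API. It also covers only the 404 case, not timeouts.

```kotlin
// Hypothetical log record; the field names are assumptions for this sketch.
data class RequestLog(val method: String, val path: String, val status: Int)

// Returns the paths whose logged requests all ended in 404, given at least
// `minHits` samples. An endpoint that has never returned anything else may
// never have been implemented.
fun suspectedMissingEndpoints(logs: List<RequestLog>, minHits: Int = 3): List<String> =
    logs.groupBy { it.path }
        .filter { (_, entries) -> entries.size >= minHits && entries.all { it.status == 404 } }
        .keys
        .sorted()
```

An endpoint that sometimes succeeds is deliberately left out, since an occasional 404 there more likely points to bad input than to an imaginary API.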

These limitations are not bugs; they are intrinsic to how current models operate. Even as models improve, it is unrealistic to expect near-future AI-generated code to be consistently perfect in production environments.

This is precisely where logs remain indispensable.

Logs as the Source of Truth

When something goes wrong in production, developers don’t rely on intentions, comments, or assumptions — they rely on evidence. Logs provide that evidence.

The same applies to AI agents.

Regardless of whether code is written by a human or generated by an AI, runtime behavior is the ultimate arbiter of correctness. Logs record what actually happened, not what was expected to happen.

They answer questions such as:

- What sequence of events led to this state?

- What inputs did the system receive?

- Which branch of logic was executed?

- What errors occurred, and in what context?

- How did external dependencies respond?

Without logs, both humans and AI agents are left guessing.

Why AI Agents Need Logs

As AI agents increasingly participate in development workflows — generating code, refactoring systems, fixing bugs, and even deploying changes — logs become a critical feedback mechanism.

Logs Close the Feedback Loop

AI agents operate on predictions. Logs provide feedback from reality.

By analyzing logs, an AI agent can:

- Validate whether generated code behaved as intended

- Detect mismatches between expected and actual outcomes

- Identify patterns that indicate bugs or regressions

- Learn from failures in real production conditions

Without logs, AI agents have no reliable way to distinguish correct behavior from silent failure.

Logs Enable Root Cause Analysis

When failures occur, understanding why they happened requires context. Logs provide structured breadcrumbs that allow both humans and AI to trace causality across components, services, and time.

As AI systems take on more responsibility, automated root cause analysis will increasingly depend on rich, well-structured logs.
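As a rough illustration of tracing causality through such breadcrumbs, the sketch below filters events by a correlation ID and orders them by time, so the steps leading up to an error can be read in sequence. The `TraceEvent` fields and the `eventsLeadingToError` helper are hypothetical names chosen for this example.

```kotlin
// Hypothetical event shape: every event carries the ID of the request it belongs to.
data class TraceEvent(
    val traceId: String,
    val timestampMs: Long,
    val service: String,
    val level: String,
    val message: String
)

// For the given trace, returns its events in time order, up to and including
// the first ERROR. Returns an empty list if the trace contains no error.
fun eventsLeadingToError(events: List<TraceEvent>, traceId: String): List<TraceEvent> {
    val timeline = events.filter { it.traceId == traceId }.sortedBy { it.timestampMs }
    val firstError = timeline.indexOfFirst { it.level == "ERROR" }
    return if (firstError == -1) emptyList() else timeline.take(firstError + 1)
}
```

This only works if every service writes the same correlation ID into its logs, which is one reason consistent structure matters more as analysis becomes automated.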

How Code Will Be Built in the Future

The rise of AI agents is not just changing how code is written — it is changing how software systems evolve over time.

We are moving toward a world where:

- AI agents generate and modify large portions of code

- Humans supervise, review, and guide rather than author every line

- Systems are continuously adjusted based on runtime feedback

- Debugging and remediation are increasingly automated

In such an environment, logs are no longer just a debugging tool. They become a primary interface between running systems and intelligent agents.

Think of logs as a bidirectional communication channel: they allow AI agents to observe system behavior in real-time, understand what's happening across distributed components, and make informed decisions about modifications and fixes. Just as APIs define how different services communicate, logs define how AI agents perceive and interact with running software. An AI agent monitoring logs can detect anomalies, correlate events across services, identify patterns that indicate potential issues, and even trigger automated responses — all without direct human intervention. This transforms logs from passive historical records into an active, queryable representation of system state that enables autonomous decision-making.

Logs May No Longer Be Written for Humans

One of the most significant shifts ahead is that logs may no longer need to be primarily human-readable.

Historically, logs were formatted for developers reading them line by line: timestamps, severity levels, free-form text messages, and stack traces.

But if the primary consumer of logs is an AI agent, the requirements shift fundamentally. Instead of human-readable prose, logs must become structured data streams that machines can parse, analyze, and reason about. Rather than a developer scanning through text messages, an AI agent needs programmatic access to event data with clear schemas, explicit context, and traceable relationships between events. The format matters less than the ability for algorithms to extract meaning, detect patterns, and make decisions based on what actually happened — not what a human thought was worth writing down.
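To make this concrete, here is a minimal sketch of a log event expressed as structured data. The `LogEvent` fields (`event`, `traceId`, `attributes`) are illustrative assumptions, not a Shipbook schema, and the hand-rolled JSON encoding exists only to keep the example dependency-free; a real project would normally use a JSON library.

```kotlin
// Hypothetical schema for a machine-readable log event.
data class LogEvent(
    val timestamp: String,   // ISO-8601 text, e.g. "2025-01-01T12:00:00Z"
    val level: String,
    val event: String,       // stable event name instead of free-form prose
    val traceId: String,     // links this event to others in the same request
    val attributes: Map<String, String> = emptyMap()
)

// Escapes quotes, backslashes, and the most common control characters.
// Enough for this sketch; not a complete JSON encoder.
fun jsonString(s: String): String = buildString {
    append('"')
    for (c in s) when (c) {
        '"' -> append("\\\"")
        '\\' -> append("\\\\")
        '\n' -> append("\\n")
        '\r' -> append("\\r")
        '\t' -> append("\\t")
        else -> append(c)
    }
    append('"')
}

// Serializes the event as one JSON object, with attributes nested so they
// cannot collide with the core fields.
fun LogEvent.toJson(): String {
    val attrs = attributes.entries.joinToString(",", "{", "}") { (k, v) ->
        "${jsonString(k)}:${jsonString(v)}"
    }
    return "{" +
        "\"timestamp\":${jsonString(timestamp)}," +
        "\"level\":${jsonString(level)}," +
        "\"event\":${jsonString(event)}," +
        "\"traceId\":${jsonString(traceId)}," +
        "\"attributes\":$attrs" +
        "}"
}
```

A free-form line such as "Request to /v2/user/trackEvent failed with 404" carries the same facts, but an agent would have to parse them back out of prose. With explicit fields, the status and path can be queried directly.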

Human readability becomes secondary. Humans may still access logs — but often through AI-generated summaries, explanations, and insights rather than raw log lines.

Logs as Active Participants in Autonomous Systems

As observability evolves, logs will increasingly move from passive storage to active participation in system behavior. Today, logs are primarily archives — repositories of what happened, consulted after the fact. Tomorrow, they will be real-time inputs that directly influence system actions.

We can already see early signs of this transformation:

- Logs triggering alerts and automated workflows — when an error pattern appears, systems can automatically scale resources, restart services, or notify teams

- Logs feeding anomaly detection systems — machine learning models analyze log streams to identify deviations from normal behavior before they escalate

- Logs being correlated with metrics and traces — combining different signals to build comprehensive views of system health

- Logs used to gate deployments or rollbacks — automated systems evaluate log patterns to decide whether a new release should proceed or be reverted
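The last item in the list above can be sketched as a simple gate that compares the error rate in a new release's logs against a baseline release. Everything here is an assumption made for illustration: the `ReleaseLog` shape, the `shouldRollBack` name, and the default tolerance. Real gating logic would likely weigh more signals than a single ratio.

```kotlin
// Hypothetical log record tagged with the release that produced it.
data class ReleaseLog(val release: String, val level: String)

// Fraction of a release's log entries that are errors; 0.0 if it has no entries.
fun errorRate(logs: List<ReleaseLog>, release: String): Double {
    val entries = logs.filter { it.release == release }
    if (entries.isEmpty()) return 0.0
    return entries.count { it.level == "ERROR" }.toDouble() / entries.size
}

// Recommends a rollback when the candidate's error rate exceeds the baseline's
// by more than `tolerance` (an absolute difference: 0.05 = five percentage points).
fun shouldRollBack(
    logs: List<ReleaseLog>,
    baseline: String,
    candidate: String,
    tolerance: Double = 0.05
): Boolean = errorRate(logs, candidate) - errorRate(logs, baseline) > tolerance
```

Note that a release with no log entries is treated as having a zero error rate, so a caller would probably want to require a minimum sample size before trusting the result.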

In AI-driven systems, this trend accelerates dramatically. Logs become the substrate on which autonomous decision-making is built. Instead of simply reacting to alerts, AI agents can proactively analyze log patterns, predict issues before they manifest, and autonomously implement fixes. An AI agent might notice subtle degradation patterns in logs, correlate them with recent code changes, generate a targeted fix, test it against historical log data, and deploy it — all without human intervention. In this model, logs aren't just records of the past; they're the sensory input that enables systems to observe, reason, and act autonomously.

Shipbook and the AI Age of Logging

At Shipbook, we believe logs are not going away — they are evolving.

Shipbook was built to give developers deep visibility into real-world application behavior, with features like:

- Powerful search and filtering

- Session-based log grouping

- Proactive classification in loglytics

But we take this even further with our Shipbook MCP Server. By implementing the Model Context Protocol, we allow AI agents to directly connect to your Shipbook account. This means your AI assistant can now search, filter, and analyze real-time production logs to help you debug issues faster and more accurately.

We are also looking ahead.

We are actively developing capabilities that prepare logs for the AI age: logs that are easier for machines to interpret, analyze, and reason about — while still remaining useful for human developers.

As AI agents become first-class participants in software development, logs won't just be a debugging tool — they'll be the trusted interface that enables intelligent systems to understand, learn from, and improve the code they generate. That's the future we're building at Shipbook: logs that power both human insight and AI autonomy.

Ready to prepare your logging infrastructure for the AI age? Shipbook gives you the power to remotely gather, search, and analyze your user logs and exceptions in the cloud, on a per-user & session basis. Start building logs that work for both your team today and the AI agents of tomorrow.
