Chapter 1. Introduction to Dependency Injection (DI)

Dependency Injection (DI) is a software design pattern commonly used in object-oriented programming and particularly prevalent in Android app development. It's a fundamental concept that aims to decouple classes from their dependencies, making them more modular, testable, and maintainable.

What is Dependency Injection?

Dependency Injection is a technique where the dependencies of a class are provided from outside the class rather than being created internally. In simpler terms, instead of a class creating its own dependencies, they are "injected" into the class from an external source.
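As a minimal illustration, here is a framework-free Kotlin sketch. Engine, Car, and the other names are hypothetical. The first class creates its own dependency internally. The second receives it from outside through its constructor, which is the simplest form of dependency injection.

```kotlin
// Hypothetical dependency used only for this illustration.
interface Engine {
    fun start(): String
}

class GasEngine : Engine {
    override fun start() = "gas engine started"
}

class ElectricEngine : Engine {
    override fun start() = "electric motor started"
}

// Without DI: the class decides which Engine it gets, so callers
// (and tests) cannot substitute another implementation.
class CarWithoutDi {
    private val engine = GasEngine()
    fun drive() = engine.start()
}

// With constructor injection: whoever creates the Car supplies the Engine.
class Car(private val engine: Engine) {
    fun drive() = engine.start()
}
```

Because Car only knows about the Engine interface, the caller can pass GasEngine, ElectricEngine, or a test double without touching Car.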

Why Dependency Injection?

The primary motivation behind using Dependency Injection is to improve the modularity and flexibility of software components. By decoupling classes from their dependencies, DI makes it easier to replace, extend, and test individual components without affecting the rest of the system.

Key Concepts of Dependency Injection

- Inversion of Control (IoC): Dependency Injection is often associated with the concept of Inversion of Control (IoC), where the control of object creation and lifecycle management is inverted from the class itself to an external entity, typically a framework or container. IoC containers, such as Dagger or Hilt in Android, manage the instantiation and dependency resolution of classes, reducing the coupling between components.

- Dependency Inversion Principle (DIP): Dependency Injection helps put the Dependency Inversion Principle into practice. This key tenet of object-oriented design states that high-level modules should not depend on low-level modules; both should depend on abstractions. With DI, a class can declare its dependencies as interfaces or abstract classes. This keeps components loosely coupled and makes it easy to substitute one implementation for another.
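The sketch below shows both ideas at once, in plain Kotlin with hypothetical names (ReportService, ReportStore, and so on). ReportService, the high-level module, depends only on the ReportStore abstraction. A single composition-root function, rather than ReportService itself, decides which implementation to create. In an app using Hilt, the container takes over the role of that function.

```kotlin
// Abstraction the high-level policy depends on (hypothetical names).
interface ReportStore {
    fun save(report: String)
    fun all(): List<String>
}

// High-level module: knows nothing about concrete storage classes.
class ReportService(private val store: ReportStore) {
    fun publish(title: String) = store.save("Report: $title")
    fun count() = store.all().size
}

// Low-level detail #1: keeps reports in memory.
class InMemoryReportStore : ReportStore {
    private val reports = mutableListOf<String>()
    override fun save(report: String) {
        reports += report
    }
    override fun all(): List<String> = reports.toList()
}

// Low-level detail #2: discards everything, e.g. for a "dry run" mode.
class NoOpReportStore : ReportStore {
    override fun save(report: String) = Unit
    override fun all(): List<String> = emptyList()
}

// Composition root: the only place that picks concrete classes.
// This is the "inverted" control that a DI container such as Hilt takes over.
fun createReportService(dryRun: Boolean): ReportService =
    ReportService(if (dryRun) NoOpReportStore() else InMemoryReportStore())
```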

Benefits of Dependency Injection

Improved Testability

DI simplifies the process of testing by allowing dependencies to be easily mocked or replaced with test doubles. Components can be tested in isolation, leading to more reliable and maintainable unit tests.

Modular Design

DI promotes a modular architecture by reducing the tight coupling between classes. Components become more reusable and interchangeable, leading to a more flexible and scalable codebase.

Simplified Dependency Management

By centralizing the management of dependencies, DI frameworks handle the instantiation and configuration of objects, reducing the complexity of manual dependency management. This leads to cleaner and more readable code, as the creation of dependencies is abstracted away from the business logic.

Let’s have a look at the following example. Suppose we have an Android app that displays a list of tasks, and we want to test the TaskListViewModel class responsible for managing tasks. First, let's define the TaskRepository interface and its implementation:

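```kotlin
import javax.inject.Inject

// Simple model used throughout the examples.
data class Task(val title: String)

interface TaskRepository {
    fun getTasks(): List<Task>
}

class TaskRepositoryImpl @Inject constructor() : TaskRepository {
    override fun getTasks(): List<Task> {
        // Placeholder: a real implementation would read from a database or an API.
        return emptyList()
    }
}
```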

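Next, let's create the TaskListViewModel class, which depends on TaskRepository. (In a complete app, a Hilt module with a @Binds method would tell Hilt to use TaskRepositoryImpl wherever a TaskRepository is requested.)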

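```kotlin
import androidx.lifecycle.LiveData
import androidx.lifecycle.MutableLiveData
import androidx.lifecycle.ViewModel
import androidx.lifecycle.viewModelScope
import dagger.hilt.android.lifecycle.HiltViewModel
import kotlinx.coroutines.launch
import javax.inject.Inject

// @HiltViewModel plus an @Inject constructor replaces the deprecated @ViewModelInject.
@HiltViewModel
class TaskListViewModel @Inject constructor(
    private val taskRepository: TaskRepository
) : ViewModel() {

    private val _tasks = MutableLiveData<List<Task>>()
    val tasks: LiveData<List<Task>> = _tasks

    init {
        loadTasks()
    }

    private fun loadTasks() {
        viewModelScope.launch {
            _tasks.value = taskRepository.getTasks()
        }
    }
}
```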

Now, let's write a unit test for the TaskListViewModel class using Hilt for dependency injection:

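```kotlin
import androidx.test.ext.junit.runners.AndroidJUnit4
import dagger.hilt.android.testing.BindValue
import dagger.hilt.android.testing.HiltAndroidRule
import dagger.hilt.android.testing.HiltAndroidTest
import dagger.hilt.android.testing.HiltTestApplication
import org.junit.Assert.assertEquals
import org.junit.Before
import org.junit.Rule
import org.junit.Test
import org.junit.runner.RunWith
import org.mockito.Mockito.mock
import org.mockito.Mockito.`when`
import org.robolectric.annotation.Config

// Runs as a local test with Robolectric, which hosts the Hilt test application.
@HiltAndroidTest
@RunWith(AndroidJUnit4::class)
@Config(application = HiltTestApplication::class)
class TaskListViewModelTest {

    @get:Rule
    val hiltRule = HiltAndroidRule(this)

    // @BindValue puts this mock into the test's Hilt graph as the TaskRepository binding.
    @BindValue
    @JvmField
    val testTaskRepository: TaskRepository = mock(TaskRepository::class.java)

    @Before
    fun setUp() {
        hiltRule.inject()
    }

    @Test
    fun testLoadTasks() {
        val mockTasks = listOf(Task("Task 1"), Task("Task 2"))
        // Stub before creating the ViewModel, because its init block loads the tasks.
        `when`(testTaskRepository.getTasks()).thenReturn(mockTasks)

        val viewModel = TaskListViewModel(testTaskRepository)

        assertEquals(mockTasks, viewModel.tasks.value)
    }
}
```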

This example illustrates the three benefits listed above:

- Improved testability: the DI mechanism lets us replace TaskRepository with a mock and configure its output as needed, so our tests can verify that the ViewModel behaves properly for specific input from the mocked repository.

- Modular design: constructor injection in TaskRepositoryImpl and TaskListViewModel lets us compose components inside one another and swap them for alternative implementations without updating the chain above or below them. For example, we can inject any implementation of TaskRepository into TaskListViewModel without changing how the ViewModel uses it.

- Simplified dependency management: with Hilt, requesting an instance of a class comes down to a simple @Inject annotation; Hilt takes care of creating the instance and supplying its required dependencies.

Dependency Injection is a powerful design pattern that enhances the flexibility, testability, and maintainability of software systems. In the context of Android development, DI frameworks like Hilt are indispensable tools for managing dependencies and building robust, scalable apps.

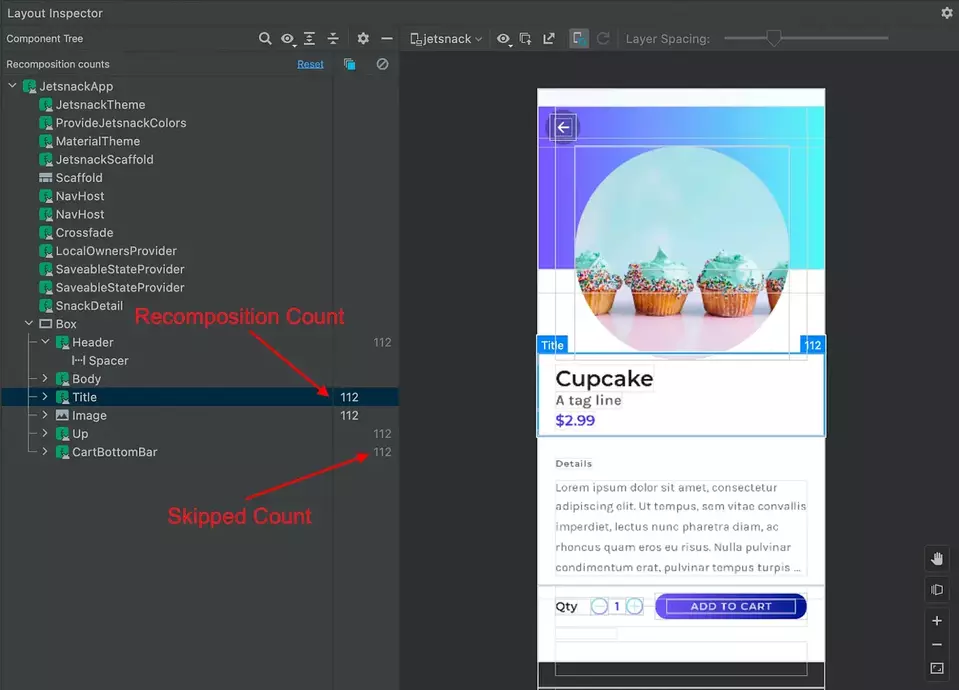

Chapter 2. What is Hilt?

Introduction to Hilt

Hilt is a dependency injection library for Android built on top of Dagger, developed by Google as part of the Android Jetpack libraries. Dagger is a dependency injection framework for Kotlin and Java applications. It helps manage dependencies by automatically providing and managing instances of classes that your application needs. Hilt aims to simplify the implementation of dependency injection in Android apps by providing a set of predefined components and annotations tailored specifically for Android development.

Key Features of Hilt

Integration with Android Components

Hilt seamlessly integrates with Android framework components such as activities, fragments, services, and view models. It provides annotations like @AndroidEntryPoint to mark Android components for injection, simplifying the process of integrating DI into these components.

Simplified Setup

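Hilt reduces the setup overhead required to use Dagger for dependency injection in Android projects. Developers no longer need to define their own Dagger components; Hilt provides a standard set of components tied to Android lifecycles and generates them from annotations and conventions. Modules are still written for bindings that Hilt can't infer on its own, but an @InstallIn annotation is all it takes to attach them to the right component.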

Annotation-Based Configuration

Hilt uses annotations extensively to configure dependency injection in Android apps. Annotations like @HiltAndroidApp, @AndroidEntryPoint, and @HiltViewModel declare where injection happens, while scope annotations like @Singleton and @ActivityScoped provide a declarative way to define the lifetime of dependencies.

Compile-Time Safety

Similar to Dagger, Hilt performs dependency resolution and validation at compile time, ensuring correctness and type safety. This helps catch dependency-related errors early in the development process, reducing the likelihood of runtime issues.

Seamless Integration with Jetpack Libraries

Hilt is designed to work with other Jetpack libraries, such as ViewModel, WorkManager, and Navigation. It provides built-in support for injecting dependencies into ViewModels (@HiltViewModel) and WorkManager workers (@HiltWorker), further simplifying the development of Android apps that use the Jetpack architecture components.

How Hilt Works

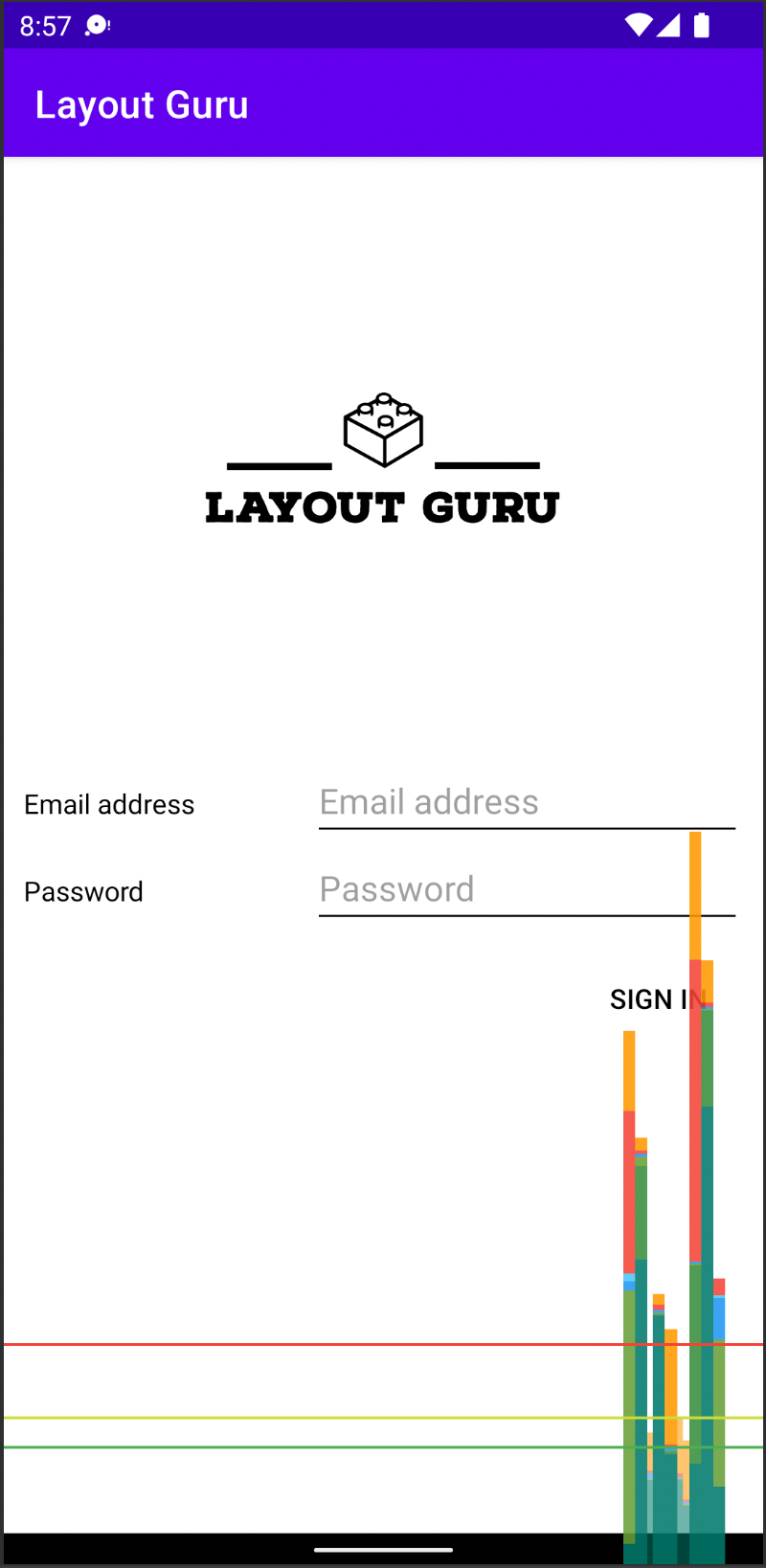

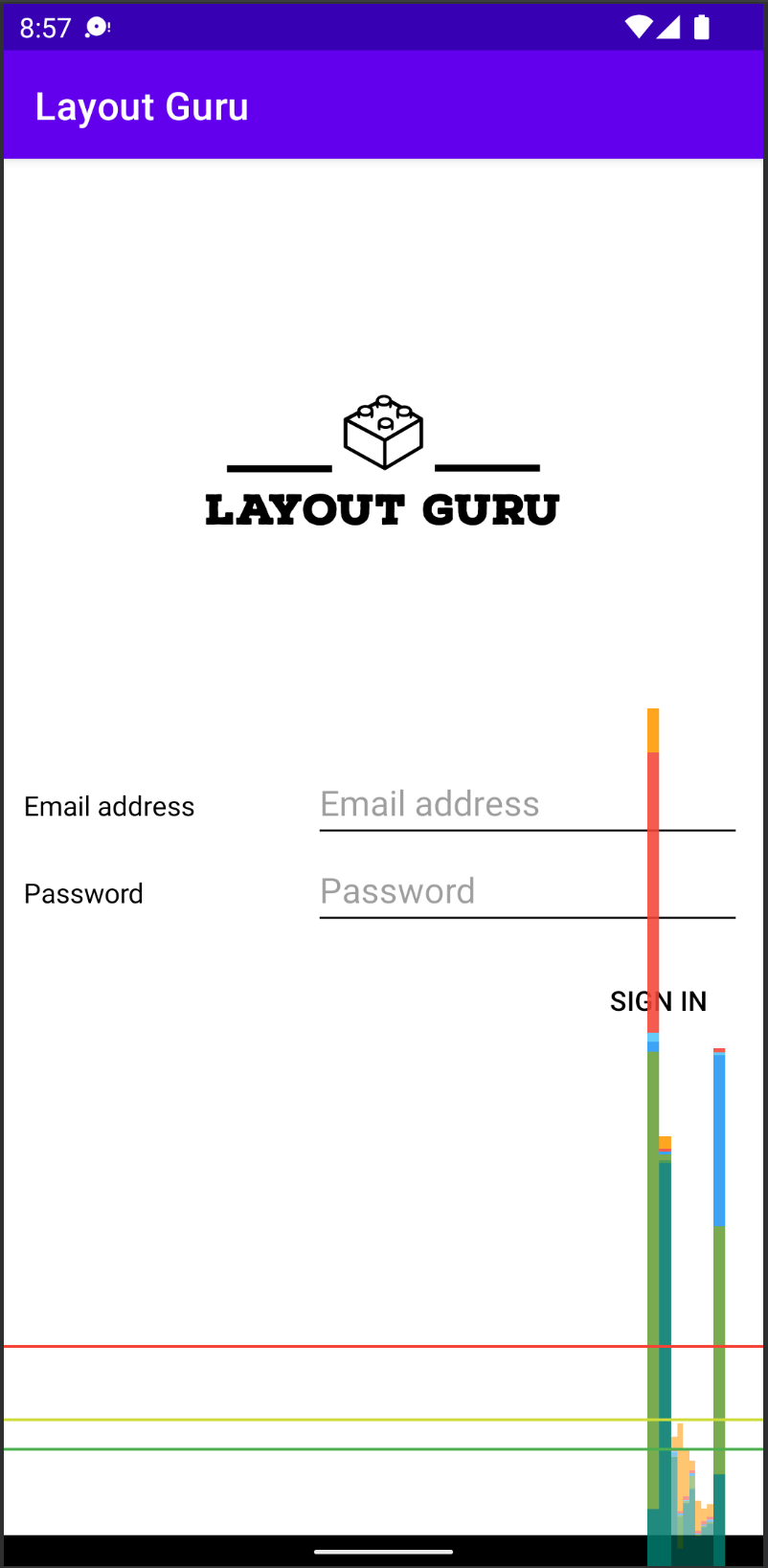

The example below is implemented in a clean new project created from Android Studio’s standard template.

Adding Hilt to your project

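First, we need to add the Dagger Hilt dependencies to our project. Add the following code to the relevant sections of the project’s app-level “build.gradle” file. The Hilt Gradle plugin must also be declared in the project-level “build.gradle” file, for example with id 'com.google.dagger.hilt.android' version '2.50' apply false.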

```groovy
plugins {
    id 'kotlin-kapt'
    id 'com.google.dagger.hilt.android'
}

dependencies {
    implementation "com.google.dagger:hilt-android:2.50"
    kapt "com.google.dagger:hilt-compiler:2.50"
}

// Allow references to generated code
kapt {
    correctErrorTypes true
}
```

Annotating our Application class

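Now we need to annotate our Application class. @HiltAndroidApp triggers Hilt's code generation, including a base class for our application that serves as the application-level dependency container for our Android classes. The class must also be registered through the android:name attribute of the application element in AndroidManifest.xml. This is done in the following way: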

```kotlin
import android.app.Application
import dagger.hilt.android.HiltAndroidApp

@HiltAndroidApp
class MyApplication : Application() {
}
```

Defining a module

A module is a class that tells Hilt how to provide the dependencies we need, particularly types Hilt can't construct on its own, such as interfaces or classes from external libraries. We define a module by annotating a class or object with @Module and using @InstallIn to specify which Hilt component it belongs to. Inside the module, we implement @Provides methods that create the necessary dependencies. This is what it looks like:

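```kotlin
import dagger.Module
import dagger.Provides
import dagger.hilt.InstallIn
import dagger.hilt.components.SingletonComponent

// AppModuleDependency and AppModuleDependencyImpl stand for any interface/implementation pair in your app.
@Module
@InstallIn(SingletonComponent::class) // SingletonComponent replaced the deprecated ApplicationComponent
object AppModule {

    @Provides
    fun appModuleDependency(): AppModuleDependency {
        return AppModuleDependencyImpl()
    }
}
```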

In this example, we defined a module called AppModule that provides a dependency called AppModuleDependency. Its @Provides method, appModuleDependency(), creates and returns an instance of AppModuleDependencyImpl whenever an AppModuleDependency is requested, and @InstallIn makes this binding available throughout the application.

Injecting dependencies into Android classes

To inject dependencies into an Android class such as an activity or a fragment, we annotate that class with @AndroidEntryPoint. This tells Hilt to generate the code required to inject dependencies into it.

Suppose we have a simple Android app with an activity that displays a list of tasks, and we want Hilt to provide the activity's ViewModel and other dependencies:

```kotlin
import android.os.Bundle
import androidx.activity.viewModels
import androidx.appcompat.app.AppCompatActivity
import dagger.hilt.android.AndroidEntryPoint

@AndroidEntryPoint
class TaskListActivity : AppCompatActivity() {

    // Obtained through ViewModelProvider; Hilt supplies the factory for Hilt ViewModels.
    private val viewModel: TaskListViewModel by viewModels()

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_task_list)

        viewModel.tasks.observe(this) { tasks ->
            // Update the UI with the list of tasks.
        }
    }
}
```

In this example, TaskListActivity is annotated with @AndroidEntryPoint, which tells Hilt to generate a component for this activity and inject its dependencies when the activity is created. TaskListViewModel is obtained through the viewModels() delegate: because the activity is an entry point and TaskListViewModel is a Hilt ViewModel (annotated with @HiltViewModel; older Hilt versions used @ViewModelInject), Hilt provides the ViewModel factory, which creates TaskListViewModel with its constructor dependencies and lets it survive configuration changes. Hilt doesn't allow a @HiltViewModel class to be field-injected with @Inject, because that would bypass ViewModelProvider. If TaskListViewModel's dependencies have dependencies of their own, Hilt resolves them through constructor injection as well.

If we hadn't used DI to provide the ViewModel, its initialization would have looked something like this (assuming TaskListViewModel uses a repository to fetch the information and a utility class to parse the list of tasks and return it properly formatted and sorted):

```kotlin
import android.os.Bundle
import androidx.appcompat.app.AppCompatActivity

class TaskListActivity : AppCompatActivity() {

    private lateinit var viewModel: TaskListViewModel

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_task_list)

        // Every dependency has to be created by hand, in the right order.
        val tasksRepository = TasksRepository()
        val tasksFormatter = TasksFormatter()
        viewModel = TaskListViewModel(tasksRepository, tasksFormatter)

        viewModel.tasks.observe(this) { tasks ->
            // Update the UI with the formatted tasks.
        }
    }
}
```

As you can see, without DI we are responsible for initializing every dependency TaskListViewModel requires and then passing them to its constructor. You can imagine how messy this code could get if TaskListViewModel required more dependencies, or if those dependencies had sub-dependencies of their own that needed to be initialized first. Strictly speaking, a ViewModel created with its constructor like this also bypasses ViewModelProvider and won't survive configuration changes, so a real manual setup would need a ViewModelProvider.Factory as well.
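Before reaching for a framework, many projects centralize this wiring in a hand-written container, the "manual dependency injection" approach described in Android's developer documentation. The following is a rough, plain-Kotlin sketch of that idea. HttpClient, TasksApi, TaskListPresenter, and AppContainer are hypothetical, and a plain presenter class stands in for the ViewModel so the code runs without Android. It approximates the kind of wiring Hilt generates and maintains for you.

```kotlin
// Hypothetical stand-ins for the app's real classes; no Android or Hilt types needed.
class HttpClient {
    // Returns canned data instead of performing a network call.
    fun get(path: String): List<String> =
        if (path == "/tasks") listOf(" write docs", "call Bob ", "buy milk") else emptyList()
}

class TasksApi(private val client: HttpClient) {
    fun fetchTasks(): List<String> = client.get("/tasks")
}

class TasksRepository(private val api: TasksApi) {
    fun getTasks(): List<String> = api.fetchTasks()
}

class TasksFormatter {
    // Trims whitespace and sorts the tasks alphabetically.
    fun format(tasks: List<String>): List<String> = tasks.map { it.trim() }.sorted()
}

class TaskListPresenter(
    private val repository: TasksRepository,
    private val formatter: TasksFormatter
) {
    fun tasks(): List<String> = formatter.format(repository.getTasks())
}

// The container builds each shared object once, in dependency order, in a single place.
class AppContainer {
    private val httpClient = HttpClient()
    private val tasksApi = TasksApi(httpClient)
    private val tasksRepository = TasksRepository(tasksApi)
    private val tasksFormatter = TasksFormatter()

    // Screen-level objects get a fresh instance per call but share the app-wide ones.
    fun taskListPresenter() = TaskListPresenter(tasksRepository, tasksFormatter)
}
```

Each screen asks the container for what it needs, for example AppContainer().taskListPresenter().tasks(). Adding a dependency to TasksRepository then means changing one line in AppContainer instead of every screen, which is exactly the bookkeeping Hilt automates.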

Chapter 3. Benefits of Hilt

Hilt, as a dependency injection library for Android development, offers several benefits that enhance the developer experience, improve code quality, and streamline the development process.

Ease of use

Hilt significantly simplifies the setup and usage of dependency injection in Android projects compared to manual configuration with Dagger. Developers no longer need to define custom Dagger components and subcomponents or connect them to Android lifecycles by hand; instead, they can rely on Hilt's predefined components, annotations, and conventions to handle much of the setup automatically. This reduces the learning curve for developers new to DI and allows them to focus more on writing application logic rather than dealing with DI configuration details.

Reduced boilerplate code

One of the primary benefits of Hilt is its ability to reduce boilerplate code associated with Dagger-based dependency injection. Hilt generates much of the repetitive code required for Dagger setup, including components, modules, and builders, based on annotations and conventions. This not only saves developers time and effort but also leads to cleaner, more concise codebases with fewer manual dependencies to manage.

Compile-Time Safety

Hilt, like Dagger, performs dependency resolution and validation at compile time, ensuring correctness and type safety. By detecting dependency-related errors early in the development process, Hilt helps prevent runtime issues and facilitates smoother debugging. Developers can rely on compile-time checks to catch mistakes such as missing bindings, circular dependencies, or incorrect scope annotations, leading to more robust and stable Android apps.

Integration with Jetpack Libraries

Hilt is designed to integrate with other Android Jetpack libraries and components, such as ViewModel, WorkManager, and Navigation. It provides built-in support for injecting dependencies into ViewModels and WorkManager workers, and libraries such as Room fit in through ordinary modules, which simplifies the implementation of recommended Android app architectures. Developers can leverage Hilt's annotations and conventions to ensure consistency and compatibility across their entire app architecture, promoting maintainability and scalability.

Scoping and Lifecycle Management

Hilt offers built-in support for scoping and managing the lifecycle of dependencies, ensuring that objects are created and destroyed appropriately based on their scope. Developers can use annotations like @Singleton, @ActivityScoped, or @ViewModelScoped to define the scope of dependencies and let Hilt handle their lifecycle automatically. This helps prevent memory leaks, optimize resource usage, and improve performance in Android apps.

Testing Support

Hilt simplifies testing by providing utilities for injecting test doubles and managing dependencies in test environments. Developers annotate their test classes with @HiltAndroidTest and use @BindValue, or test modules installed with @TestInstallIn (or a test @Module combined with @UninstallModules), to provide dependencies specific to their test scenarios. This makes it easier to write comprehensive unit tests and integration tests for Android apps, leading to higher code coverage and better overall test quality.

Chapter 4. Scoping and Lifecycle Management

Scoping and lifecycle management are crucial aspects of dependency injection in Android app development. They ensure that objects are created, reused, and destroyed appropriately, optimizing resource usage and preventing memory leaks. In this chapter, we'll explore how Hilt handles scoping and lifecycle management of dependencies in Android apps.

Understanding Scopes in Hilt

Scoping refers to the lifespan of objects managed by Hilt. By defining scopes for dependencies, developers can control when objects are created and destroyed, ensuring that they exist for the appropriate duration and are available when needed.

Singleton Scope

The @Singleton scope in Hilt ensures that a single instance of a dependency is shared across the entire application. Hilt creates a @Singleton object the first time it is requested and then reuses it for the rest of the application's lifetime. This scope is typically used for dependencies that are expensive to create or need to be shared globally.

Suppose we have a logging utility class called ShipbookLogger that is used throughout our Android application to log messages to various destinations such as the console, file, and remote server. We want to ensure that there is only one instance of ShipbookLogger created and shared across all components of our application to maintain consistency and optimize resource usage. First, let's define our “ShipbookLogger” class:

```kotlin
import javax.inject.Inject
import javax.inject.Singleton

@Singleton
class ShipbookLogger @Inject constructor() {
    fun log(message: String) {
        println("Logging message: $message")
    }
}
```

In this example, we annotate the ShipbookLogger class with @Singleton to indicate that it should be treated as a singleton and only one instance should be created by Hilt and shared across the entire application.

Now, let's use the ShipbookLogger class in various parts of our application. For example, in an activity:

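```kotlin
import android.os.Bundle
import androidx.appcompat.app.AppCompatActivity
import dagger.hilt.android.AndroidEntryPoint
import javax.inject.Inject

@AndroidEntryPoint
class MainActivity : AppCompatActivity() {

    // Field injection: the logger is available after super.onCreate().
    @Inject
    lateinit var logger: ShipbookLogger

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)
        logger.log("MainActivity started")
    }
}
```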

In this activity, we inject the ShipbookLogger instance through field injection with @Inject. Since we've annotated the ShipbookLogger class with @Singleton, Hilt will provide the same instance of ShipbookLogger to every component that requests it throughout the application. Similarly, we can inject ShipbookLogger into other components such as fragments, view models, and services, and be assured that they all share the same instance.
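The difference between a scoped and an unscoped binding, and the fact that a scoped instance is created on first request rather than at startup, can be modeled in a few lines of plain Kotlin. This is a simplified sketch, not Hilt's generated code: InstanceProvider, scoped, unscoped, and ExpensiveClient are hypothetical names, and Kotlin's lazy stands in for the caching Dagger performs for scoped bindings.

```kotlin
// Minimal provider abstraction (hypothetical; not the javax.inject or Dagger API).
fun interface InstanceProvider<T> {
    fun get(): T
}

// Unscoped binding: every request creates a new instance.
fun <T> unscoped(factory: () -> T): InstanceProvider<T> =
    InstanceProvider<T> { factory() }

// Scoped binding: created on the first request, then cached.
// lazy() is synchronized by default, so concurrent first requests
// still produce a single instance.
fun <T> scoped(factory: () -> T): InstanceProvider<T> {
    val cached = lazy(factory)
    return InstanceProvider<T> { cached.value }
}

// Hypothetical dependency that counts how often it is constructed.
class ExpensiveClient {
    init {
        created++
    }

    companion object {
        var created = 0
    }
}
```

Dagger's real implementation differs in detail, but the observable behavior is the same: with scoped { ExpensiveClient() }, nothing is constructed until the first get(), and every later get() returns that same instance, whereas unscoped creates a new one on each call.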

Activity Scope

The @ActivityScoped scope in Hilt ties a dependency to the lifecycle of an activity. Hilt creates at most one instance per activity instance, on first request, and releases it when the activity is destroyed. This scope is useful for dependencies that are specific to an activity and should be cleaned up when the activity is no longer in use. Because the fragment component is a child of the activity component, @ActivityScoped bindings are also available to the fragments and views hosted by that activity, which all receive the same instance.

Suppose we have an application that supports various interchangeable themes each with its own unique configuration. The light and dark themes are represented by separate activities - LightThemeActivity and DarkThemeActivity respectively. The configuration state of each theme will be tracked by a ThemeStateManager.

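```kotlin
import dagger.hilt.android.scopes.ActivityScoped
import javax.inject.Inject

// Placeholder for the app's own theme configuration type.
data class ThemeConfig(val name: String)

@ActivityScoped
class ThemeStateManager @Inject constructor() {

    private var themeConfig: ThemeConfig? = null

    fun setSelectedThemeConfig(themeConfig: ThemeConfig) {
        this.themeConfig = themeConfig
    }

    fun getSelectedThemeConfig(): ThemeConfig? {
        return themeConfig
    }
}
```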

In this example, ThemeStateManager is annotated with @ActivityScoped to indicate that there should be one instance of this class per activity instance. This ensures that each instance of LightThemeActivity and DarkThemeActivity has its own ThemeStateManager instance, allowing them to maintain separate states of the ThemeConfig for the different themes.

Fragment Scope

The @FragmentScoped scope in Hilt ties a dependency to the lifecycle of a fragment: Hilt creates at most one instance per fragment instance and releases it when the fragment is destroyed. This scope is similar to @ActivityScoped but applies to fragments instead of activities.

Suppose we have a note-taking app where users can create and edit notes. We want to allow users to open multiple instances of the note editor (NoteEditorFragment) simultaneously, each with its own independent state.

```kotlin
import dagger.hilt.android.scopes.FragmentScoped
import javax.inject.Inject

@FragmentScoped
class NoteManager @Inject constructor() {

    // Note contents keyed by note ID; lives as long as the owning fragment.
    private val noteContentMap: MutableMap<Int, String> = mutableMapOf()

    fun saveNoteContent(noteId: Int, content: String) {
        noteContentMap[noteId] = content
    }

    fun getNoteContent(noteId: Int): String? {
        return noteContentMap[noteId]
    }

    fun deleteNoteContent(noteId: Int) {
        noteContentMap.remove(noteId)
    }
}
```

NoteManager can then be injected into NoteEditorFragment (annotated with @AndroidEntryPoint). Because NoteManager is @FragmentScoped, each NoteEditorFragment instance receives its own NoteManager, which it uses to save, retrieve, and delete note content independently of the other open editors.
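The relationship between these two scopes can be modeled in plain Kotlin. In this hypothetical sketch (not Hilt's API), ActivityScope and FragmentScope stand in for Hilt's activity and fragment components, and lazy properties stand in for scoped bindings. Every fragment scope gets its own NoteManager. ThemeStateManager, by contrast, is looked up from the parent activity scope, so fragments in the same activity share one instance while different activities get different ones.

```kotlin
// Plain stand-ins for the scoped classes above (no Hilt annotations).
class ThemeStateManager
class NoteManager

// Hypothetical model of an activity-level component:
// at most one ThemeStateManager per activity instance, created on first use.
class ActivityScope {
    val themeStateManager: ThemeStateManager by lazy { ThemeStateManager() }

    // Each fragment hosted by this activity gets a child scope.
    fun newFragmentScope() = FragmentScope(parent = this)
}

// Hypothetical model of a fragment-level component.
class FragmentScope(private val parent: ActivityScope) {
    // Fragment-scoped: one NoteManager per fragment instance.
    val noteManager: NoteManager by lazy { NoteManager() }

    // Activity-scoped bindings are resolved from the parent scope,
    // so every fragment in the same activity sees the same instance.
    val themeStateManager: ThemeStateManager
        get() = parent.themeStateManager
}
```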

Benefits of Scoping in Hilt

Resource Optimization

By defining appropriate scopes for dependencies, developers can optimize resource usage and prevent memory leaks. Scoped dependencies are created and destroyed as needed, ensuring that resources are released when no longer in use.

Lifecycle Awareness

Scoped dependencies in Hilt are aware of the Android component's lifecycle they're associated with, whether it's an activity, fragment, or application. This ensures that objects are cleaned up properly when their associated component is destroyed, reducing the risk of memory leaks and improving app stability.

Modularization

Scoping allows developers to modularize their codebase and encapsulate dependencies within specific components or features of the app. This promotes code reuse, maintainability, and separation of concerns, making it easier to reason about and maintain the app architecture.

Chapter 5. Testing with Hilt

Testing is a critical aspect of software development, ensuring that code behaves as expected and meets the requirements. Hilt, with its testing support, simplifies the process of writing comprehensive unit tests and integration tests for Android apps.

Overview of Testing with Hilt

Hilt provides utilities and annotations that support testing in Android apps, allowing developers to inject dependencies and manage test environments effectively. Dependency injection lets tests focus on the crucial aspects of the business logic by abstracting away the setup of components that are required but unrelated to the behavior under test, for example by replacing database connections or remote data sources with mocks configured for specific behavior. With Hilt, developers can write tests that cover various aspects of an app's functionality, from unit tests for individual components to integration tests for larger features. The following examples abstract away an authentication mechanism and a remote data source, so the tests can concentrate on verifying what happens after a given outcome.

Unit Testing with Hilt

Unit testing involves testing individual units or components of code in isolation, typically using mock objects or test doubles for dependencies. Hilt simplifies unit testing by providing utilities to inject mock dependencies into classes under test.

Using @BindValue Annotation

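The @BindValue annotation lets a @HiltAndroidTest class contribute one of its own fields to the test's dependency graph. Annotating a field with @BindValue binds that field's value as the dependency, so every class Hilt injects during the test receives the mock object or test double instead of the real implementation. (If the production binding comes from a module, that module is removed for the test with @UninstallModules.)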

Example: a unit test with Hilt, including the full setup of its dependencies.

An interface representing the authentication repository:

```kotlin
interface AuthRepository {
    fun login(email: String, password: String): Boolean
}
```

A test class implementing this interface to simulate authentication (a real implementation would verify the user's credentials through an API call):

```kotlin
class TestAuthRepositoryImpl : AuthRepository {
    // Placeholder credentials for the test double.
    override fun login(email: String, password: String): Boolean {
        return email == "user@example.com" && password == "password"
    }
}
```

A class that handles the login logic using the authentication repository:

```kotlin
import javax.inject.Inject

class LoginManager @Inject constructor(private val authRepository: AuthRepository) {
    fun loginUser(email: String, password: String): Boolean {
        return authRepository.login(email, password)
    }
}
```

The unit test class using Hilt:

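```kotlin
import androidx.test.ext.junit.runners.AndroidJUnit4
import dagger.hilt.android.testing.BindValue
import dagger.hilt.android.testing.HiltAndroidRule
import dagger.hilt.android.testing.HiltAndroidTest
import dagger.hilt.android.testing.HiltTestApplication
import org.junit.Assert.assertFalse
import org.junit.Assert.assertTrue
import org.junit.Before
import org.junit.Rule
import org.junit.Test
import org.junit.runner.RunWith
import org.mockito.ArgumentMatchers.anyString
import org.mockito.Mockito.mock
import org.mockito.Mockito.`when`
import org.robolectric.annotation.Config
import javax.inject.Inject

// @Config(HiltTestApplication) is the Robolectric setup; instrumented tests
// configure HiltTestApplication through a custom test runner instead.
@HiltAndroidTest
@RunWith(AndroidJUnit4::class)
@Config(application = HiltTestApplication::class)
class ExampleUnitTest {

    @get:Rule
    val hiltRule = HiltAndroidRule(this)

    // The mock becomes the AuthRepository binding that LoginManager receives.
    @BindValue
    @JvmField
    val authRepository: AuthRepository = mock(AuthRepository::class.java)

    @Inject
    lateinit var loginManager: LoginManager

    @Before
    fun setup() {
        hiltRule.inject()
    }

    @Test
    fun testLoginSuccess() {
        `when`(authRepository.login(anyString(), anyString())).thenReturn(true)
        val result = loginManager.loginUser("user@example.com", "password")
        assertTrue(result)
    }

    @Test
    fun testLoginFailure() {
        `when`(authRepository.login(anyString(), anyString())).thenReturn(false)
        val result = loginManager.loginUser("user@example.com", "password")
        assertFalse(result)
    }
}
```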
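Because LoginManager receives AuthRepository through its constructor, its logic can also be checked in a plain JVM unit test, without Hilt or Mockito, by passing in a hand-written fake. The sketch below restates AuthRepository and LoginManager without @Inject so it compiles on its own. FakeAuthRepository is a hypothetical fake that returns a configured result and records its calls. The Hilt test above remains useful for verifying that the dependency graph is wired correctly; a test like this one is faster and focuses purely on the logic.

```kotlin
// Restated from above without @Inject so the sketch compiles without Hilt.
interface AuthRepository {
    fun login(email: String, password: String): Boolean
}

class LoginManager(private val authRepository: AuthRepository) {
    fun loginUser(email: String, password: String): Boolean =
        authRepository.login(email, password)
}

// Hand-written fake: returns a configured result and records every call,
// playing the role the Mockito mock plays in the Hilt test.
class FakeAuthRepository(private val result: Boolean) : AuthRepository {
    val calls = mutableListOf<Pair<String, String>>()

    override fun login(email: String, password: String): Boolean {
        calls += email to password
        return result
    }
}
```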

Integration Testing with Hilt

Integration testing involves testing the interactions between different components or features of an app. Hilt simplifies integration testing by providing utilities to initialize test environments and inject dependencies into Android components.

Using @HiltAndroidTest Annotation

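The @HiltAndroidTest annotation marks a test class that uses Hilt, so Hilt can generate a dependency graph for each test. It is used in both instrumented tests and Robolectric tests. Fields of the test class annotated with @Inject are populated when hiltRule.inject() is called, and any @AndroidEntryPoint activities or fragments launched during the test receive their dependencies from the same test graph. Instrumented tests additionally need a custom test runner that uses HiltTestApplication.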

Example: an integration test with Hilt, including the full setup of its dependencies.

An interface representing an abstract data repository:

```kotlin
// Simple model for the examples below.
data class Item(val name: String)

interface DataRepository {
    suspend fun fetchData(): List<Item>
}
```

A test implementation of the repository that returns fixed items instead of fetching them from a remote source (the real implementation would load them from the server or a database):

```kotlin
class TestRemoteDataRepository : DataRepository {
    override suspend fun fetchData(): List<Item> {
        return listOf(Item("Item 1"), Item("Item 2"), Item("Item 3"))
    }
}
```

A ViewModel class that uses this repository to fetch and expose the data:

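```kotlin
import androidx.lifecycle.LiveData
import androidx.lifecycle.MutableLiveData
import androidx.lifecycle.ViewModel
import androidx.lifecycle.viewModelScope
import dagger.hilt.android.lifecycle.HiltViewModel
import kotlinx.coroutines.launch
import javax.inject.Inject

@HiltViewModel
class MainViewModel @Inject constructor(
    private val dataRepository: DataRepository
) : ViewModel() {

    private val _items = MutableLiveData<List<Item>>()
    val items: LiveData<List<Item>> = _items

    init {
        // Loads once on creation; viewModelScope is cancelled when the ViewModel is cleared.
        viewModelScope.launch {
            _items.value = dataRepository.fetchData()
        }
    }
}
```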

An activity that observes the ViewModel and displays the exposed list of items:

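```kotlin
import android.os.Bundle
import androidx.activity.viewModels
import androidx.appcompat.app.AppCompatActivity
import dagger.hilt.android.AndroidEntryPoint

@AndroidEntryPoint
class MainActivity : AppCompatActivity() {

    // Hilt supplies the factory that builds MainViewModel with its dependencies.
    private val viewModel: MainViewModel by viewModels()

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)

        viewModel.items.observe(this) { items ->
            // Display the items, e.g. by submitting them to a RecyclerView adapter.
        }
    }
}
```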

A test case validating the behavior of these components:

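```kotlin
import androidx.arch.core.executor.testing.InstantTaskExecutorRule
import androidx.test.core.app.launchActivity
import dagger.hilt.android.testing.BindValue
import dagger.hilt.android.testing.HiltAndroidRule
import dagger.hilt.android.testing.HiltAndroidTest
import org.junit.Assert.assertNotNull
import org.junit.Assert.assertTrue
import org.junit.Before
import org.junit.Rule
import org.junit.Test

@HiltAndroidTest
class ExampleIntegrationTest {

    @get:Rule(order = 0)
    val hiltRule = HiltAndroidRule(this)

    // Lets getOrAwaitValue() observe LiveData from the test thread.
    @get:Rule(order = 1)
    val instantTaskExecutorRule = InstantTaskExecutorRule()

    // Binds the test repository for this test, including for MainActivity's ViewModel.
    // If a production module binds DataRepository, uninstall it with @UninstallModules.
    @BindValue
    @JvmField
    val dataRepository: DataRepository = TestRemoteDataRepository()

    @Before
    fun setUp() {
        hiltRule.inject()
    }

    @Test
    fun testActivityBehavior() {
        val scenario = launchActivity<MainActivity>()
        scenario.onActivity { activity ->
            assertNotNull(activity)
        }
    }

    @Test
    fun testViewModelBehavior() {
        // Hilt doesn't allow field-injecting a @HiltViewModel class,
        // so the ViewModel is created directly with the test repository.
        val viewModel = MainViewModel(dataRepository)

        // getOrAwaitValue() is the LiveDataTestUtil helper from Android's testing
        // samples, not a library API; copy it into the test source set.
        val items = viewModel.items.getOrAwaitValue()

        assertNotNull(items)
        assertTrue(items.isNotEmpty())
    }
}
```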

Best Practices for Testing with Hilt

When writing tests with Hilt, developers should adhere to the following best practices:

Use Mock Objects for Dependencies

In unit tests, use mock objects or test doubles to simulate the behavior of dependencies and isolate the code under test. This allows developers to verify the functionality of individual components in isolation without relying on real dependencies.

Keep Tests Fast and Independent

Make sure tests are fast-running and independent of each other to facilitate quick feedback and maintainability. Minimize dependencies between tests and use techniques like test parallelization to speed up test execution.

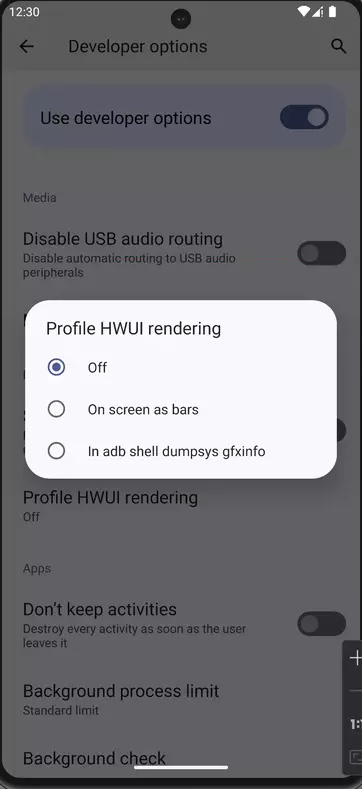

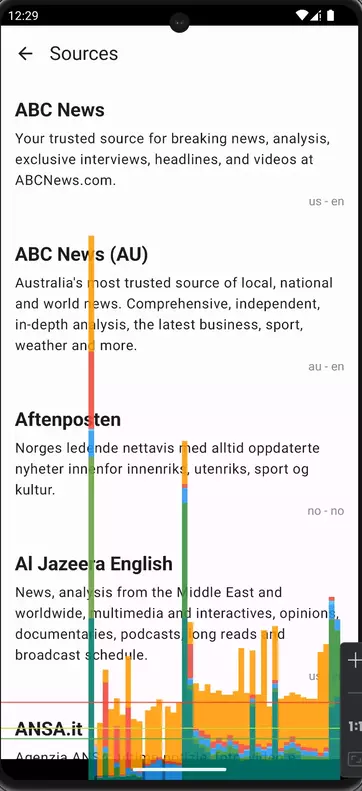

Chapter 6. Performance Considerations

In Android app development, performance is a critical aspect that directly impacts user experience and app usability. When using Hilt for dependency injection, it's essential to consider performance implications to ensure that the app remains responsive and efficient. In this chapter, we'll explore various performance considerations when using Hilt in Android apps.

Overhead of Reflection

A commonly cited performance concern with Hilt (and Dagger) is reflection overhead. In practice, Dagger and Hilt generate their components and factories at compile time through annotation processing (kapt or KSP), and the generated code calls constructors and @Provides methods directly, so dependency resolution does not rely on reflection at runtime. The costs that remain are longer build times, the size of the generated code, and the time spent creating objects during startup, which matters most on older devices or devices with limited resources.

Mitigation Strategies

- Proguard/R8 Optimization: Enable R8 (the successor to ProGuard) to shrink, obfuscate, and optimize your code, including the code Hilt generates, and to remove unused code paths. This reduces app size and can help startup time and memory footprint. To enable shrinking, obfuscation, and optimization, add the following to your module-level build script (build.gradle.kts, Kotlin DSL):

```kotlin
android {
    buildTypes {
        getByName("release") {
            // Turns on R8 shrinking, obfuscation, and optimization for release builds.
            isMinifyEnabled = true
        }
    }
    // ...
}
```

- Ahead-of-Time (AOT) Code Generation: Dagger and Hilt already resolve the dependency graph ahead of time, generating static component implementations at compile time, so there is no runtime reflection to eliminate. To reduce the build-time cost of this code generation, consider migrating from kapt to KSP, which recent Dagger and Hilt versions support.

- Minimize Component Size: Keep Dagger/Hilt dependency graphs lean by avoiding unnecessary bindings and modularizing your codebase. Less generated code and fewer objects to construct when entry points are injected lead to faster builds, faster startup, and lower memory overhead.

Eager Initialization

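Another performance consideration is when dependencies are created. Hilt doesn't build bindings eagerly at app startup; an object is created when it is first requested. However, when an entry point such as the Application or an activity is injected, every @Inject field and all of its transitive dependencies are created at that moment. Injecting many heavy objects into these classes can therefore add unnecessary overhead at startup, especially if some of those dependencies are rarely used or only needed in specific scenarios.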

Mitigation Strategies

- Lazy Loading: Defer the creation of dependencies until they are actually needed. Within Hilt, you can inject dagger.Lazy<T> (or Provider<T>) instead of T, so the instance is only created when get() is first called. For objects you build yourself, Kotlin's lazy delegate achieves the same, as in this Retrofit example:

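```kotlin
import androidx.appcompat.app.AppCompatActivity
import retrofit2.Retrofit
import retrofit2.converter.gson.GsonConverterFactory

class MainActivity : AppCompatActivity() {

    // Built on first access, not when the activity is created.
    // RetrofitService (the API interface) and BASE_URL are defined elsewhere in the app.
    private val retrofitService: RetrofitService by lazy {
        Retrofit.Builder()
            .baseUrl(BASE_URL)
            .addConverterFactory(GsonConverterFactory.create())
            .build()
            .create(RetrofitService::class.java)
    }
}
```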

- Custom Scoping: Implement custom scoping mechanisms to control the lifecycle of dependencies more granularly. By defining custom scopes for different parts of the app, developers can ensure that dependencies are initialized only when required and released when no longer needed, minimizing resource usage and improving performance.
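The effect of deferring creation is easy to check with Kotlin's lazy delegate alone. In this hypothetical sketch, ReportGenerator counts its constructions, so you can observe that creating a ReportScreen does not build the generator and that repeated access reuses the first instance. An injected dagger.Lazy<T> behaves the same way from the caller's point of view; the sketch avoids Hilt so it runs on a plain JVM.

```kotlin
// Hypothetical expensive dependency that counts its constructions.
class ReportGenerator {
    init {
        instances++
    }

    fun generate() = "report"

    companion object {
        var instances = 0
    }
}

class ReportScreen {
    // Not constructed together with ReportScreen, only on first access.
    private val generator: ReportGenerator by lazy { ReportGenerator() }

    fun onExportClicked(): String = generator.generate()
}
```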

Memory Management

Effective memory management is crucial for maintaining optimal app performance, particularly on resource-constrained devices such as older smartphones or tablets. With dependency injection, it's important to ensure that objects are appropriately garbage-collected when no longer in use to prevent memory leaks and excessive memory consumption.

Mitigation Strategies

- Scoped Lifecycle Management: Leverage Hilt's built-in support for scoping and lifecycle management to control the lifespan of dependencies. By associating dependencies with specific scopes (e.g., activity scope, fragment scope), developers can ensure that objects are cleaned up when their associated component is destroyed, reducing the risk of memory leaks.

- Weak References: When a long-lived object (for example, a @Singleton) needs to refer to a shorter-lived one, such as an activity or a listener, consider holding it through a weak reference. A weak reference doesn't prevent its referent from being garbage-collected once no strong references remain, which helps free up memory and prevent leaks; the code holding it must handle get() returning null.

```kotlin
import java.lang.ref.WeakReference

val person = Person("Boris")
val personWeakReference = WeakReference<Person>(person) // get() returns null once person is collected
```
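A slightly fuller sketch, with hypothetical names, shows the typical pattern. A long-lived DownloadTracker keeps only a weak reference to a short-lived listener, such as a screen, and checks for null before using it. If the screen is destroyed and collected, the tracker simply stops delivering updates instead of keeping the screen in memory.

```kotlin
import java.lang.ref.WeakReference

// Short-lived object, e.g. a screen that wants progress updates (hypothetical).
fun interface ProgressListener {
    fun onProgress(percent: Int)
}

// Long-lived object (e.g. app-scoped) that must not keep listeners alive.
class DownloadTracker {
    private var listenerRef: WeakReference<ProgressListener>? = null

    fun register(listener: ProgressListener) {
        listenerRef = WeakReference(listener)
    }

    // Returns true if a live listener received the update.
    fun publish(percent: Int): Boolean {
        // Null if no listener was registered or it has already been collected.
        val listener = listenerRef?.get() ?: return false
        listener.onProgress(percent)
        return true
    }
}
```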

Testing Impact

When considering performance, it's also essential to evaluate the impact of Hilt on testing. While dependency injection frameworks like Hilt facilitate testing by providing utilities for injecting test doubles and managing dependencies in test environments, they can also introduce overhead in test setup and execution.

Mitigation Strategies

- Isolation of Test Scenarios: Identify and isolate critical test scenarios that require dependency injection and focus on optimizing the performance of these tests. Use Hilt's testing support to provide mock or test double implementations of dependencies and avoid unnecessary overhead in test setup.

- Test Suite Optimization: Optimize test suites by grouping tests with similar dependencies and minimizing the number of redundant injections. Consider using dependency injection frameworks' features such as test modules or custom test scopes to streamline test setup and reduce overhead.

Chapter 7. Conclusion

Dependency injection is a powerful technique in Android app development for managing dependencies, improving code maintainability, and facilitating testing. With the introduction of Hilt, developers now have a streamlined and developer-friendly solution for implementing dependency injection in their Android apps.

As developers continue to build complex and feature-rich Android apps, tools like Hilt play a crucial role in ensuring code quality, scalability, and maintainability. By adopting Hilt in their projects, developers can leverage the benefits of dependency injection while minimizing the associated overhead and complexity. And the more feature-rich your app becomes, the greater the need for an adequate logging tool. This is where Shipbook steps in, helping to ease the pain of constantly digging through logs during debugging.